Definition and Problem Overview

A social media stream is a feed or wall of content gathered from various digital platforms and then streamed through a specific online channel. Such a channel can be a digital signage display, a social media aggregator, or a similar outlet; examples include YouTube Live and Hootsuite. A physical informational kiosk can also play this role.

The difference between a social media stream and a livestream is that the former encompasses a range of pre-recorded, edited, and curated data: news, weather forecasts, quarterly reports, event coverage, advertising, etc.

This means that such content can be digitally tampered with without the broader audience noticing the forgery, which can lead to unwanted or even disastrous consequences.

Livestreaming, however, is not completely safe from deepfake interference either. In a practice that is already gaining popularity in China, digital avatars of real-life influencers can be “trained” to promote various products on e-commerce platforms like Taobao or Kuaishou, essentially “automating” media personalities to increase post counts and extend their audience reach. According to a report by MIT Technology Review, creating such a clone takes only a few minutes of sample video and about $1,000. Tools akin to SwapStream also allow face swapping during a live online broadcast.

Social Impact of Deepfake Technology on Social Media

The negative impact of digitally altered content has been discussed at length by many authors and researchers, including Chesney and Citron, Jack Clark, Samantha North, Nicholas O’Donnell, and others. The potential threats can be grouped into a few classes:

- Political. Disinformation, slander, and truth distortion can be used to spread rumors, manipulate elections, spark civil unrest, or justify violence. Examples include the deepfake video confession used by the military junta against Myanmar's State Counsellor Aung San Suu Kyi and a fabricated erotic video targeting Indian journalist Rana Ayyub.

- Economic. Deepfake content can cause far-reaching damage to companies and financial institutions, from besmirching reputations on business review platforms like Yelp to subverting an initial public offering. A bad actor could commit corporate sabotage by producing a deepfake of a company’s CEO announcing the cancellation of a merger or a high-profile resignation. Deepfakes can also disrupt an entire market: in May 2023, an AI-generated picture of an alleged explosion next to the Pentagon went viral and caused panic on the stock market.

- Legal. AI-manipulated media is widely employed in a variety of criminal schemes: CEO fraud, romance scams on dating platforms, blackmail, breaching biometric security through impersonation (spoofing), producing fake evidence, and others. In February 2024, scammers used a deepfake video call to trick a finance worker at a multinational firm into transferring $25 million.

- Social and moral. Deepfakes can also be involved in harassment, online bullying, conflict instigation, and other similar scenarios. They are already widespread in “revenge porn,” where manufactured nude photos and videos are used to attack the target’s reputation, cause shame, or blackmail them. A deepfake-based case of bullying occurred in Almendralejo, Spain, when an AI-powered app was used to undress school students in their photos, which were then published on social media.

Researchers’ concerns surrounding the possibilities of deepfakes can be summed up with the famous quote from Marshall McLuhan: “The medium is the message because it is the medium that shapes and controls the scale and form of human association and action”.

Categorizing Fake Media

Generally, synthesized media is divided into four main categories: images, video, audio, and hybrid multimedia, in which the three previous types are mixed.

1. Fake Images

According to a 2022 review, detecting fabricated pictures is still the biggest challenge due to their static nature: video and audio deepfakes tend to leave noticeable artifacts, such as compression noise or unnatural distortion, that expose their true nature. Since fake photos lack those telltale indicators, detecting a fake photo with the naked eye is quite challenging, if not impossible.

Such images are mostly synthesized with Generative Adversarial Networks (GANs) and with diffusion models, among other methods. A diffusion model gradually adds noise to an initial image and then learns to reverse the process, which lets it capture and reconstruct subtle details and patterns.
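The noising half of a diffusion model can be illustrated with a short sketch. It assumes the commonly used closed form x_t = sqrt(ā_t)·x_0 + sqrt(1 − ā_t)·ε and a linear noise schedule; the schedule values, function names, and the toy four-pixel "image" are illustrative assumptions rather than details taken from any specific generator. The reverse, image-producing direction requires a trained network and is not shown.

```python
import math
import random

def alpha_bar(betas):
    """Cumulative product of (1 - beta_t): share of the original signal kept after t steps."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def noise_image(pixels, abar_t, rng):
    """Sample x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * eps, with eps ~ N(0, 1)."""
    keep, spread = math.sqrt(abar_t), math.sqrt(1.0 - abar_t)
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]

# Assumed linear schedule: 1000 steps, beta rising from 1e-4 to 0.02.
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]
abars = alpha_bar(betas)

rng = random.Random(0)
x0 = [0.5, -0.25, 1.0, 0.0]                 # toy "image" of four normalized pixels
x_early = noise_image(x0, abars[9], rng)    # after 10 steps: mostly signal
x_late = noise_image(x0, abars[-1], rng)    # after 1000 steps: almost pure noise
```

Because the kept share `abars[t]` shrinks at every step, the last step leaves almost none of the original image, which is the starting point a trained model would denoise from.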

2. Fake Videos

Currently, the majority of fake videos boil down to facial deepfakes, as they are the easiest to produce and are sufficient for goals such as impersonation. However, the possibility of architecting entire scenes and environments should not be excluded, as Text-to-Video (T2V) tools capable of producing highly realistic output may emerge in the future, with Make-A-Video being a prime example.

Creating such a video is fairly accessible to anyone, as several GAN-based face-swapping apps are widely available: Face Swap Video, ReFace, FaceMagic, and others. A more sophisticated tool, DeepFaceLab 2.0, provides an extensive guide on how to produce a high-grade deepfake on a home computer.

3. Fake Audio

So far, audio deepfakes have proven to be a devastating force in terms of corporate scams, especially in scenarios where it is not feasible to critically analyze the audio in real time. A prime example is the UAE case where a caller used an audio deepfake to authorize a transfer during a phone call, resulting in a loss of $35 million. Audio deepfakes also have the potential to bypass voice verification systems, which could have devastating financial consequences.

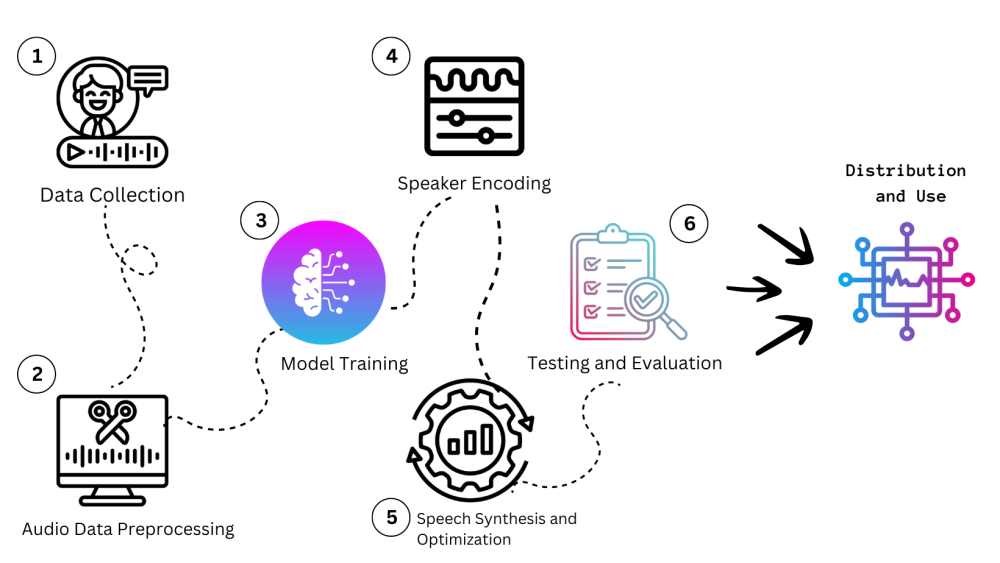

Voice cloning and realistic audio synthesis can be achieved with several solutions. Among them is WaveNet, a parametric text-to-speech generator based on stacked causal convolutions, which preserve the strict order of temporal data (the output and input share the same time dimensionality). As a result, it can produce highly realistic voices that can be modified with accents, intonations, and other speech quirks. Other examples include DeepVoice 3, which is based on a fully convolutional character-to-spectrogram model.
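The idea of a causal convolution can be shown with a minimal, dependency-free sketch. This is not WaveNet itself: the function names and toy kernels are assumptions for illustration, and a real model would use learned weights, many stacked layers, and nonlinearities. The sketch only shows that each output sample depends on the current and earlier inputs, and that the output has the same length as the input.

```python
def causal_conv1d(signal, kernel, dilation=1):
    """1-D causal convolution: output[t] uses only signal[t], signal[t - d], ...

    kernel[0] weights the current sample, kernel[k] the sample k * dilation steps back.
    Positions before the start of the signal count as zeros (left padding).
    """
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * signal[idx]
        out.append(acc)
    return out

def receptive_field(kernel_size, dilations):
    """How many input samples (current plus past) the top of a layer stack can see."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = causal_conv1d(x, kernel=[0.5, 0.5])                # mean of current and previous sample
z = causal_conv1d(x, kernel=[1.0, -1.0], dilation=2)   # difference with the sample 2 steps back
```

Stacking layers with growing dilations widens the visible history quickly: `receptive_field(2, [1, 2, 4, 8])` covers 16 samples with only four layers.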

4. Fake Hybrid Multimedia

Finally, if all the previous types of fake content are combined, they can produce an explosive effect by providing a fully immersive picture for the viewer. For instance, it’s possible to synthesize an ultra-realistic news report covering events that never actually occurred. This is especially dangerous considering that social media provide the elements of virality and shareability.

Detection Tools and Platforms

Many cutting-edge forensic solutions have been proposed in response to the meteoric rise of deepfakes. Among them are:

1. FakeCatcher

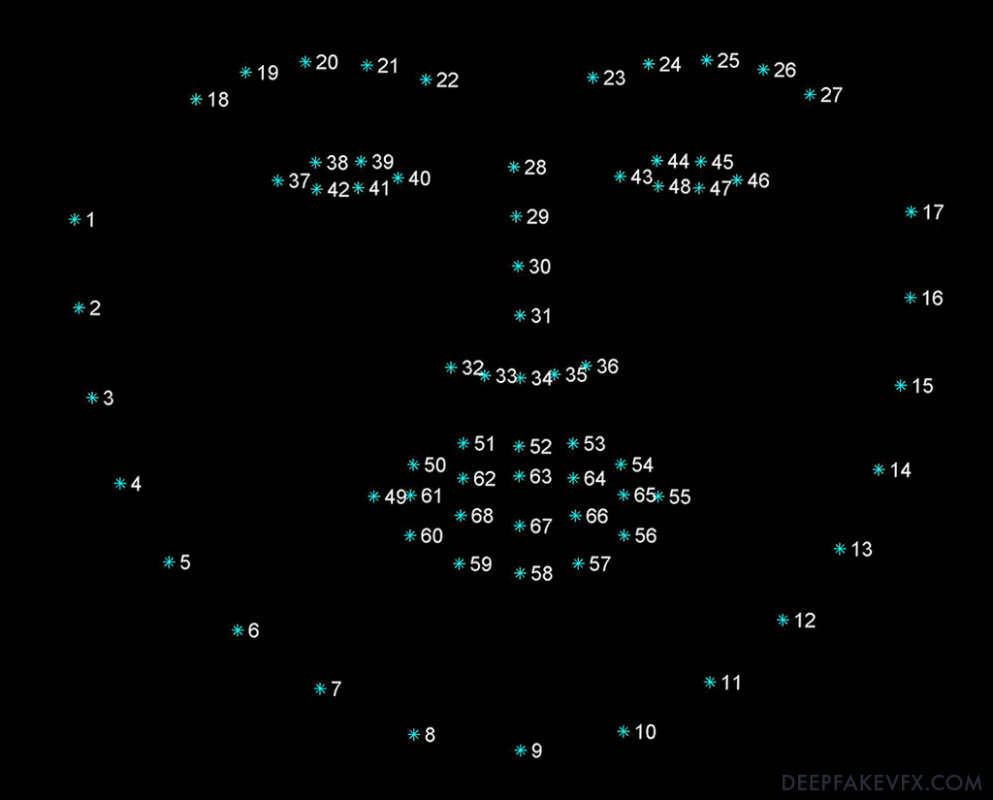

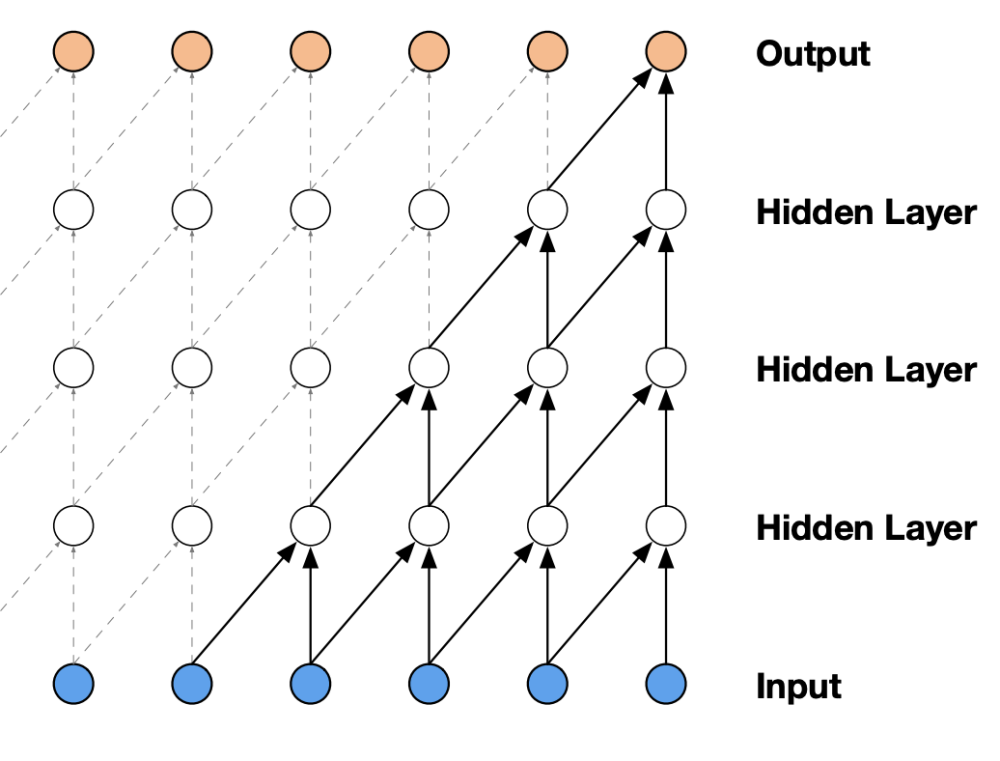

Developed at Intel, FakeCatcher operates by detecting biological signals naturally inherent to the human face. Namely, it employs the principle of photoplethysmography (PPG), which analyzes subtle color shifts in human skin caused by blood flow and breathing. To improve detection accuracy, it also includes a Convolutional Neural Network (CNN) that boosts the effectiveness of a generalized classifier for fake content.
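The underlying signal idea can be sketched in a few lines. The example below is not FakeCatcher's implementation: it assumes that a per-frame average color value of a skin patch has already been extracted, and it simply looks for the strongest periodic component within an assumed plausible pulse band (0.7 to 4 Hz). The function name, band limits, and synthetic trace are all illustrative.

```python
import cmath
import math

def dominant_frequency(samples, fps, low_hz=0.7, high_hz=4.0):
    """Return (frequency in Hz, spectral power) of the strongest component in the band.

    `samples` holds one value per video frame, e.g. the mean green intensity of a
    skin patch. The band limits are an assumed range for a human pulse.
    """
    n = len(samples)
    mean = sum(samples) / n
    centred = [s - mean for s in samples]          # remove the constant skin tone
    best_freq, best_power = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if not (low_hz <= freq <= high_hz):
            continue
        # Naive discrete Fourier transform coefficient for frequency bin k.
        coeff = sum(c * cmath.exp(-2j * math.pi * k * i / n) for i, c in enumerate(centred))
        power = abs(coeff) ** 2
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq, best_power

# Synthetic 10-second clip at 30 fps: skin tone 120 with a faint 1.2 Hz pulse.
fps = 30
trace = [120 + 0.5 * math.sin(2 * math.pi * 1.2 * i / fps) for i in range(10 * fps)]
freq, power = dominant_frequency(trace, fps)
bpm = 60 * freq
```

A trace with no periodic color variation yields a power close to zero, so the returned power can serve as a rough indicator of whether a pulse-like signal is present at all. A real detector would combine many such signals across face regions and pass them to a classifier.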

2. Deepfake-o-Meter

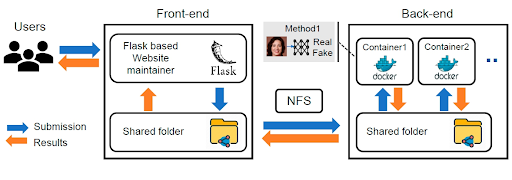

Deepfake-o-Meter is an open-source platform for checking online content. In essence, it serves as a hub that combines a repertoire of detection tools: the CNN-based MesoNet, which analyzes mesoscopic characteristics of an image; the XceptionNet model; the Capsule deepfake classifier, which is based on VGG19; and others.

3. Proof of Humanity

Proof of Humanity, or PoH, is a project that seeks to prevent Sybil attacks, in which a single person pretends to be multiple people. It is suggested that PoH should be based on a zero-knowledge proof protocol to provide anonymity. It can help avoid fraud in multiple areas: smart voting, token distribution, reputation systems, social payments, etc.

For more info about deepfake detection tools please refer to this article.

Detection Methods and Algorithms

New detection methods have been proposed to identify falsified content in a timely manner, especially in response to fake content posted on social media.

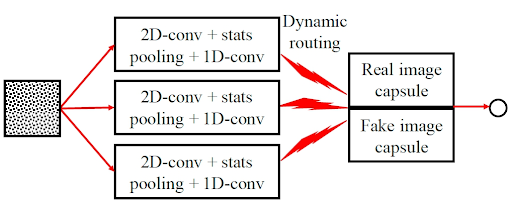

One proposed capsule network architecture is composed of three primary capsules and two output capsules, one for fake and one for real images. It extracts latent features and dynamically routes them to the output capsules, which allows it to detect face swaps, facial reenactment, and fully synthesized images with a reported accuracy of nearly 100%.

EasyDeep is a solution specifically developed for detecting forgeries on social media. It relies on Shannon entropy, Haralick’s texture features (contrast, dissimilarity, homogeneity, energy, and correlation), and image histogram learning with the LightGBM classifier, which keeps the solution light enough to run on ordinary consumer devices.
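Two of the feature families named above can be computed with a short, dependency-free sketch. It is not EasyDeep's code: the function names, the single right-hand-neighbor offset for the co-occurrence matrix, and the toy images are assumptions made for illustration, and the classifier step is omitted.

```python
import math
from collections import Counter

def shannon_entropy(image):
    """Entropy in bits of the gray-level histogram of a 2-D list of ints."""
    counts = Counter(v for row in image for v in row)
    total = sum(counts.values())
    return abs(-sum((c / total) * math.log2(c / total) for c in counts.values()))

def glcm_features(image):
    """Contrast, homogeneity, and energy from a normalized co-occurrence matrix.

    Each pixel is paired with its right-hand neighbor only; using this single
    offset is a simplification chosen for the sketch. Energy is computed here as
    the sum of squared probabilities (some libraries take its square root).
    """
    pairs = Counter()
    for row in image:
        for a, b in zip(row, row[1:]):
            pairs[(a, b)] += 1
    total = sum(pairs.values())
    contrast = sum(c / total * (a - b) ** 2 for (a, b), c in pairs.items())
    homogeneity = sum(c / total / (1 + (a - b) ** 2) for (a, b), c in pairs.items())
    energy = sum((c / total) ** 2 for c in pairs.values())
    return {"contrast": contrast, "homogeneity": homogeneity, "energy": energy}

flat = [[5, 5, 5], [5, 5, 5]]                 # uniform patch: no texture at all
checker = [[0, 255, 0], [255, 0, 255]]        # alternating patch: maximal local contrast

flat_features = glcm_features(flat)
checker_features = glcm_features(checker)
```

In a full pipeline, values like these would be concatenated into one feature vector per image and passed to a gradient-boosting classifier; the appeal of such hand-crafted features is that they are cheap to compute compared with running a deep network.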

Other techniques include FAMM, which focuses on unnatural facial muscle motions; temporal consistency analysis, which extracts temporal correlation features across video frames; and a combination of a CNN-based ResNeXt and Long Short-Term Memory (LSTM), which learns order dependence between frames and makes it possible to detect deepfake videos with high accuracy.

Deepfake Source Identification

An algorithm named DeSI offers a way to track sources of deepfakes posted on X (formerly known as Twitter). It filters out text tweets, focusing only on posts that contain media. Then, it employs a system of queries and analyzes retweets, user IDs, and other similar data to track down the initial source of false media.
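The general tracing idea can be illustrated with a toy sketch. It is not the DeSI algorithm: the record layout, field names (`has_media`, `retweet_of`, `user`), and the in-memory dictionary are hypothetical stand-ins for whatever queries and platform data the real system uses.

```python
def trace_source(posts, post_id):
    """Follow `retweet_of` links from a media post back to the earliest known post.

    `posts` maps post id -> record; the field names are invented for this sketch.
    Returns None for text-only posts, mirroring the media-only filter.
    """
    post = posts[post_id]
    if not post["has_media"]:
        return None
    seen = {post_id}
    while post["retweet_of"] is not None and post["retweet_of"] in posts:
        parent_id = post["retweet_of"]
        if parent_id in seen:          # guard against malformed, cyclic data
            break
        seen.add(parent_id)
        post = posts[parent_id]
    return post["user"]

# Hypothetical sample data: post 3 reposts post 2, which reposts post 1.
posts = {
    1: {"user": "origin_account", "has_media": True, "retweet_of": None},
    2: {"user": "amplifier_a", "has_media": True, "retweet_of": 1},
    3: {"user": "amplifier_b", "has_media": True, "retweet_of": 2},
    4: {"user": "commenter", "has_media": False, "retweet_of": None},
}
source = trace_source(posts, 3)
```

Here the chain from post 3 ends at `origin_account`, while the text-only post 4 is skipped.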

Strategies and Countermeasures Against Deepfakes in Social Media

Researchers have suggested several general guidelines for tackling deepfake threats. In particular, authors have emphasized the importance of promoting digital literacy and awareness among regular users, especially older users and those in remote regions. One possible aid to this goal is making deepfake detection solutions more accessible to the general public.

It’s also suggested that social media platforms can take precautions to limit the spread of deepfakes, such as strengthening their content policies and building authentication systems that make it possible to identify a piece of content’s origin and genuine authorship.

To learn more about advances in deepfake detection tools, as well as how these tools work and how they are tested, read on in our next article.

Antispoofing
