Deepfake Forensics: Definition and Overview

Deepfake forensics is a subset of digital forensics: a group of techniques and approaches for detecting falsified content, especially content intended for legal use. These methods have been in development since at least 2005, when researcher Hany Farid suggested using color filter array artifacts left by digital cameras to distinguish genuine pictures from photoshopped ones.

Deepfake forensics takes this work a step further by focusing on media fabricated with the help of deep learning. In some cases, these methods can also be used to track down cheapfakes — content produced with less sophisticated tools.

This area of forensics has become essential due to the sudden surge of widely accessible deepfake tools that can swap faces, extract and copy body movements, mimic human voices, or even generate entire scenes, as in the “burning building” case that prompted a stock sell-off frenzy.

This highlights the importance of deepfake forensics as a reliable tool for detecting digital manipulation in evidence, exposing fraud, and averting the so-called liar’s dividend: the tactic of dismissing genuine recorded evidence as fake.

Impact of Deepfake Crimes on Law Enforcement

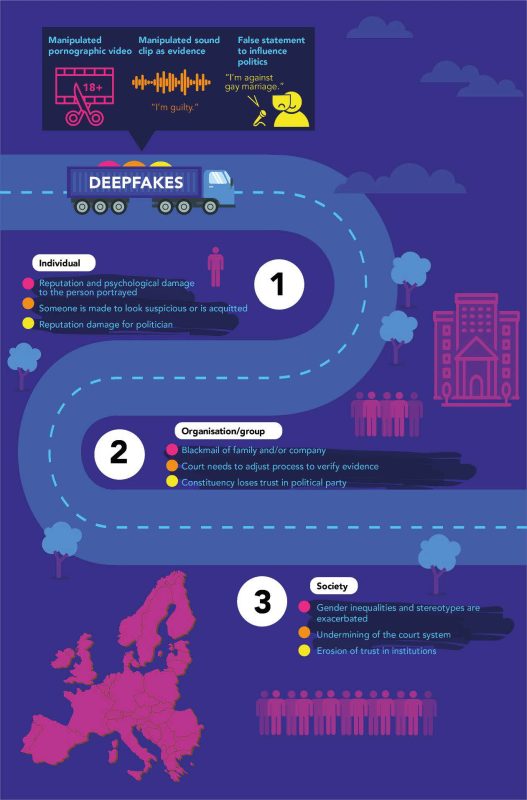

According to a 2020 analysis of the potential impact of deepfakes in the courtroom, the presence of fake media may lead to the following scenarios:

- Evidence. A piece of fabricated media can be created to initiate litigation or be used as a defense.

- Legal imbroglio. One of the parties involved in litigation may unknowingly use a piece of deepfake media as part of their testimony.

- Trust pollution. Deepfake materials may also “sneak” into the records of trusted and respected sources, such as news agencies, national archives, and media centers, and later be introduced in a court hearing. This could undermine the reputation of the custodian holding the records, creating a ripple effect that involves other cases.

- Reverse CSI effect. The “CSI effect” refers to a jury wrongly acquitting a defendant because the investigation fails to provide the kind of unrealistically impressive evidence of guilt that jurors are used to seeing on TV. Now that the possibility of deepfake evidence has entered public consciousness, a “reverse” CSI effect could occur: the defense may discredit genuine audio or video evidence as a deepfake and convince the jurors.

The veracity of digital evidence was disputed even before the rise of deepfakes. In the 2010 case of People v. Beckley, the court ruled that prosecutors could not use an unauthenticated digital photo retrieved from a MySpace page.

Deepfakes themselves have already found their way into legal practice. One notable case occurred in 2020, when a British woman presented an audio recording modified with an AI tool as evidence of her spouse’s violent behavior in a child custody dispute. She had no previous experience with this technology and succeeded simply by watching online tutorials and using unnamed software.

A possible instance of reality denial can be observed in a lawsuit against Tesla brought by the family of a Tesla owner who died in a car crash while using the car’s Autopilot feature. The family cited a YouTube video of a conference at which Musk commented on the feature. Tesla’s lawyers, however, claimed the video might have been altered with deepfake technology. The court rejected the argument, with Judge Evette Pennypacker remarking, “What Tesla is contending is deeply troubling to the Court.”

Digital Evidence Awareness Among Law Enforcement Agencies

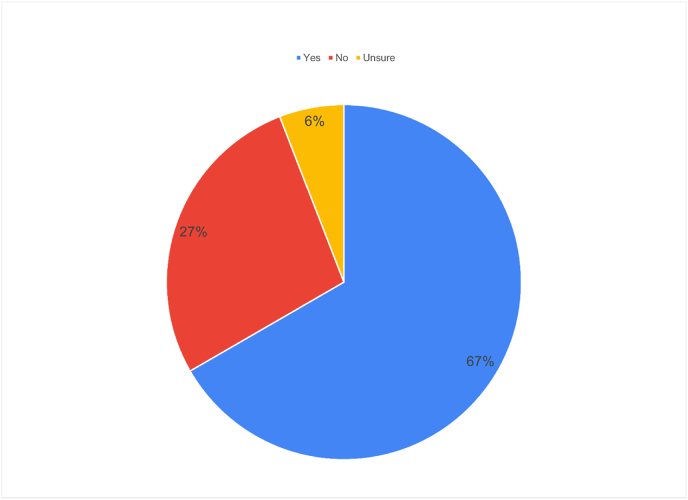

According to a 2023 survey, about two-thirds of the prosecutors who responded are concerned about whether digital evidence will be fully admissible. The study concludes that, to counter such evidence successfully, law enforcement agencies need the following:

- Training. There is a need for digital forensic specialists who can explain how complicated deepfake technology works in simple terms for each individual case.

- Gap analysis. This involves analyzing how successfully the system distinguishes fake from real digital evidence, and what needs to be done to improve it.

- Legislative advocacy. New standards and accountability should be introduced regarding the use of fake digital evidence.

- Specialized prosecutors. A cohort of prosecutors — as well as other legal staff — should be trained in digital forensics.

- Support for the science of digital forensics. Legal authorities should have access to digital forensics laboratories, specialist advice, and knowledge sources.

Increased competence among law enforcement, officers of the court, and the general public could help repel, and perhaps even nullify, attempts to counterfeit digital evidence.

Legislation and Regulation of Deepfakes

Jurisdictions throughout the world have begun addressing the deepfake issue almost simultaneously. The leading examples are:

- USA

The Deepfakes Accountability Act, introduced in 2019, is perhaps one of the first legislative initiatives created to combat malicious deepfake usage. The bill requires anyone who intends to distribute deepfake material, or who knows that it may be distributed, to disclose that the media has been fabricated or manipulated. It further states that deepfakes may not be used for election interference or to harm an individual’s reputation, life, or well-being. At the time of writing, the bill has not been enacted into law.

A number of US states also prohibit deepfake revenge pornography, that is, “inserting a woman's digital likeness” into pornographic content using a deepfake without consent. Several laws have also been passed against using deepfakes to interfere in elections or to spread defamation targeting candidates. The state of New York additionally addresses the ethical problem of deceased persons being recreated with machine learning.

- China

In China, all AI-generated content must be accompanied by a disclaimer. This requirement applies to individuals as well as to companies that use such content for entertainment purposes. There are also specific provisions for providers of deepfake technology.

- European Union

In the EU, a combination of policy and regulatory frameworks has been developed to curb the spread of fabricated content. These include the General Data Protection Regulation (GDPR), which shields individuals from the harmful effects of deepfakes; the Code of Practice on Disinformation; the copyright regime; and other initiatives.

- United Kingdom

In the UK, sharing intimate material without consent became illegal in January 2024 under the Online Safety Act. The country has also launched an initiative called “Enough,” which aims to counter the creation of false, offensive media targeting women, who are disproportionately affected by malicious deepfakes.

Technological Tools for Deepfake Detection and Analysis

Several forensic detection tools have been developed to date. Among them are:

- DARPA

The Defense Advanced Research Projects Agency (DARPA) created a program called MediFor (Media Forensics) that detects manipulated media and analyzes its digital, physical, and semantic integrity so that content can be filtered and prioritized more effectively. It also integrates an anatomical library containing extensive data on head and facial muscle movements, which helps expose deepfakes more efficiently.

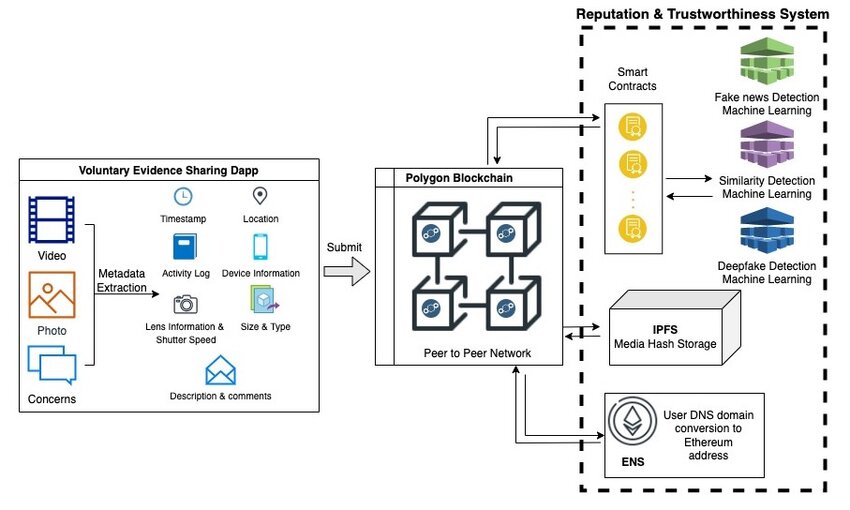

- Dapp

Dapp is a platform built on the principle of decentralized applications, which run on a blockchain-like system. It uses machine learning and smart contracts to solve three pivotal problems: evidence authentication, acquisition and origin traceability of content, and archiving/evaluation.

In simple terms, it allows people to register their media files, such as photos or phone-captured videos, with a unique smart contract so that tampering can be detected. Whenever a modified version of a registered file appears online, the system flags it as “fake.”
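To make the registry idea concrete, here is a minimal Python sketch of hash-based tamper detection. It is not Dapp's implementation: it assumes that each file is fingerprinted with SHA-256 and that an in-memory dictionary stands in for the smart contract's storage. The `MediaRegistry` class and its `register` and `check` methods are hypothetical names chosen for illustration.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class MediaRegistry:
    """Hypothetical stand-in for an on-chain registry: maps a media ID to the
    SHA-256 fingerprint recorded when the owner registered the original file."""
    fingerprints: Dict[str, str] = field(default_factory=dict)

    @staticmethod
    def fingerprint(data: bytes) -> str:
        # Any change to the bytes, however small, yields a different digest.
        return hashlib.sha256(data).hexdigest()

    def register(self, media_id: str, data: bytes) -> None:
        # Write-once semantics, loosely mimicking an immutable ledger entry.
        if media_id in self.fingerprints:
            raise ValueError(f"{media_id!r} is already registered")
        self.fingerprints[media_id] = self.fingerprint(data)

    def check(self, media_id: str, data: bytes) -> str:
        # Compare a copy found online against the registered fingerprint.
        expected = self.fingerprints.get(media_id)
        if expected is None:
            return "unregistered"
        return "authentic" if self.fingerprint(data) == expected else "fake"


if __name__ == "__main__":
    registry = MediaRegistry()
    original = b"...raw bytes of a phone-captured video..."
    registry.register("clip-001", original)
    print(registry.check("clip-001", original))
    print(registry.check("clip-001", original + b"\x00"))
    print(registry.check("clip-002", original))
```

Because any change to the bytes produces a different digest, this exact-match scheme would also flag benign edits such as recompression or resizing; a real system would need a policy for such cases.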

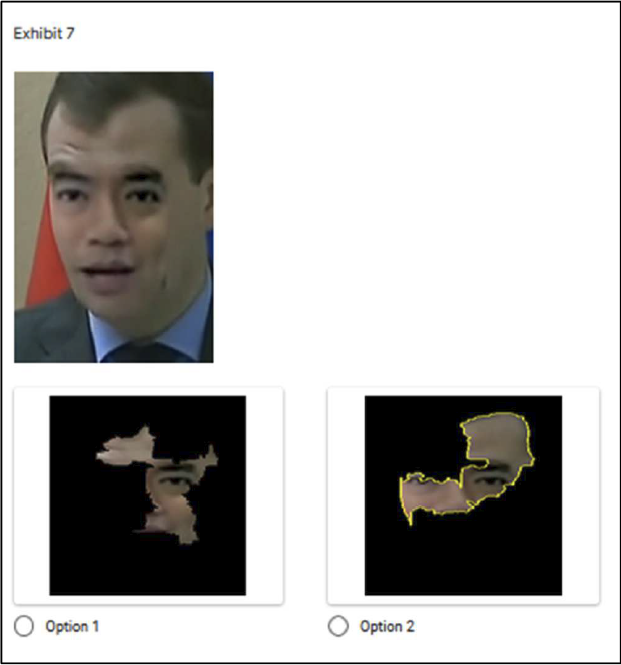

- XAIVIER

XAIVIER is based on the principles of XAI (explainable artificial intelligence). Built on EfficientNet, it detects fabricated superpixels in individual frames of a video clip and then presents information about the altered regions as a simple explanation.
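The idea of pointing to the regions that drove a verdict can be illustrated with occlusion sensitivity, a common model-agnostic explainability technique. This is a hedged sketch, not XAIVIER's published method: it assumes a grayscale frame stored as nested lists, uses a fixed grid of square patches in place of true superpixels (which would normally come from a segmentation algorithm such as SLIC), and replaces the EfficientNet classifier with a hypothetical `toy_fake_score` function. `occlusion_map` is likewise a hypothetical name.

```python
from typing import Callable, List, Tuple

Frame = List[List[float]]  # grayscale frame, pixel values in [0, 1]


def occlusion_map(
    frame: Frame,
    fake_score: Callable[[Frame], float],
    patch: int = 2,
    fill: float = 0.5,
) -> List[Tuple[Tuple[int, int], float]]:
    """Score each square patch by how much hiding it lowers the detector's
    'fake' score. Larger drops mean the patch contributed more to the verdict."""
    height, width = len(frame), len(frame[0])
    baseline = fake_score(frame)
    results = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            # Copy the frame and grey out one patch (the "occlusion").
            occluded = [row[:] for row in frame]
            for y in range(top, min(top + patch, height)):
                for x in range(left, min(left + patch, width)):
                    occluded[y][x] = fill
            drop = baseline - fake_score(occluded)
            results.append(((top, left), drop))
    # Most influential patches first, ready to be reported as an explanation.
    return sorted(results, key=lambda item: item[1], reverse=True)


def toy_fake_score(frame: Frame) -> float:
    """Hypothetical stand-in for an EfficientNet-based detector: it simply
    treats saturated pixels (value 1.0) as manipulation artifacts."""
    pixels = [p for row in frame for p in row]
    return sum(1 for p in pixels if p >= 1.0) / len(pixels)


if __name__ == "__main__":
    frame = [[0.2] * 4 for _ in range(4)]
    frame[0][2] = frame[1][3] = 1.0  # "manipulated" pixels in the top-right patch
    for (top, left), drop in occlusion_map(frame, toy_fake_score)[:2]:
        print(f"patch at row {top}, col {left}: score drop {drop:.3f}")
```

Patches whose occlusion causes the largest drop in the fake score are the ones an explanation would highlight. With a real detector, the loop calls the model once per patch, so the patch size trades explanation detail against compute cost.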

- APATE

APATE, named after the Greek goddess of deceit, is a suite of deepfake detection tools developed with the help of the French National Scientific Police Service. Its aim is to provide a dedicated detector for each individual case and each deepfake family.

Strategies, Solutions, and Future Outlook

It has been suggested that combating fake media requires a comprehensive strategy that includes raising public awareness, lobbying for legislation against nefarious uses of the technology, creating regional computer crime task forces, and supporting partnerships with the expert and scientific community.

As the technology behind deepfake content becomes more widespread, more convincing, and easier to misuse, it is crucial that everyone involved in the justice system, including law enforcement, judges, jurors, and prosecutors, knows which detection strategies can verify real content and debunk altered content. Despite the rapid advance of the technology, it is still up to these individuals to maintain the integrity of the evidence presented to the courts.

Since AI exploded onto the scene, there have been calls for regulatory bodies to standardize how such media is handled. We have more information about AI regulation in our next article.

Antispoofing
