Natural Language Processing (NLP) is a branch of artificial intelligence (AI) that enables machines to understand and use human language in order to interact with real people. The roots of NLP reach back to the early 1900s, when Ferdinand de Saussure formulated his structuralist approach to human language. In Cours de Linguistique Générale, published posthumously and compiled from his lectures by Charles Bally and Albert Sechehaye, he presents language as a system of structures whose relationships convey and reproduce meaning in spoken and written phrases.

By the early 1950s, mathematical models of neurons by McCulloch and Pitts and by Hodgkin and Huxley had drawn attention to the idea of replicating human thought and interaction with a machine. For NLP, a significant milestone came in 1966 with the first chatbot, ELIZA, which simulated a psychotherapist by using pattern matching and substitution.

ELIZA paved the way for other conversational programs and opened developers’ eyes to what might be possible with more sophisticated simulation of human conversation.
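The pattern matching and substitution behind ELIZA can be illustrated with a few regular expressions. This is a minimal sketch only: the rules, the `REFLECTIONS` table, and the function names are hypothetical and are not taken from the original ELIZA script.

```python
import re

# Hypothetical rules for illustration; not the original ELIZA script.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Pronoun swaps so that echoed fragments read from the program's point of view.
REFLECTIONS = {"my": "your", "me": "you", "i": "you", "am": "are", "your": "my"}


def reflect(fragment: str) -> str:
    """Lowercase the fragment and swap first-person and second-person words."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(utterance: str) -> str:
    """Return the template of the first matching rule, filled with the reflected match."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."  # fallback when no rule matches
```

For example, `respond("I need a break from my job.")` matches the first rule and echoes the captured fragment back with "my" swapped for "your". Nothing in the program models meaning; it only rearranges the user's own words.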

Through the early 2010s, chatbots continued to spread: marketers used them to communicate with large groups of people, and companies used them to streamline customer service. AI chatbots entered widespread public use in 2016, when Facebook began allowing company pages to program chatbots to interact with customers.

However, a new era for NLP began in 2018, when GPT-1 was released. It was the first Generative Pre-trained Transformer language model, and the architecture it introduced went on to generate large volumes of coherent text in various genres, from casual conversation to news and comedy, or even academic writing, which can make such models look almost sentient.

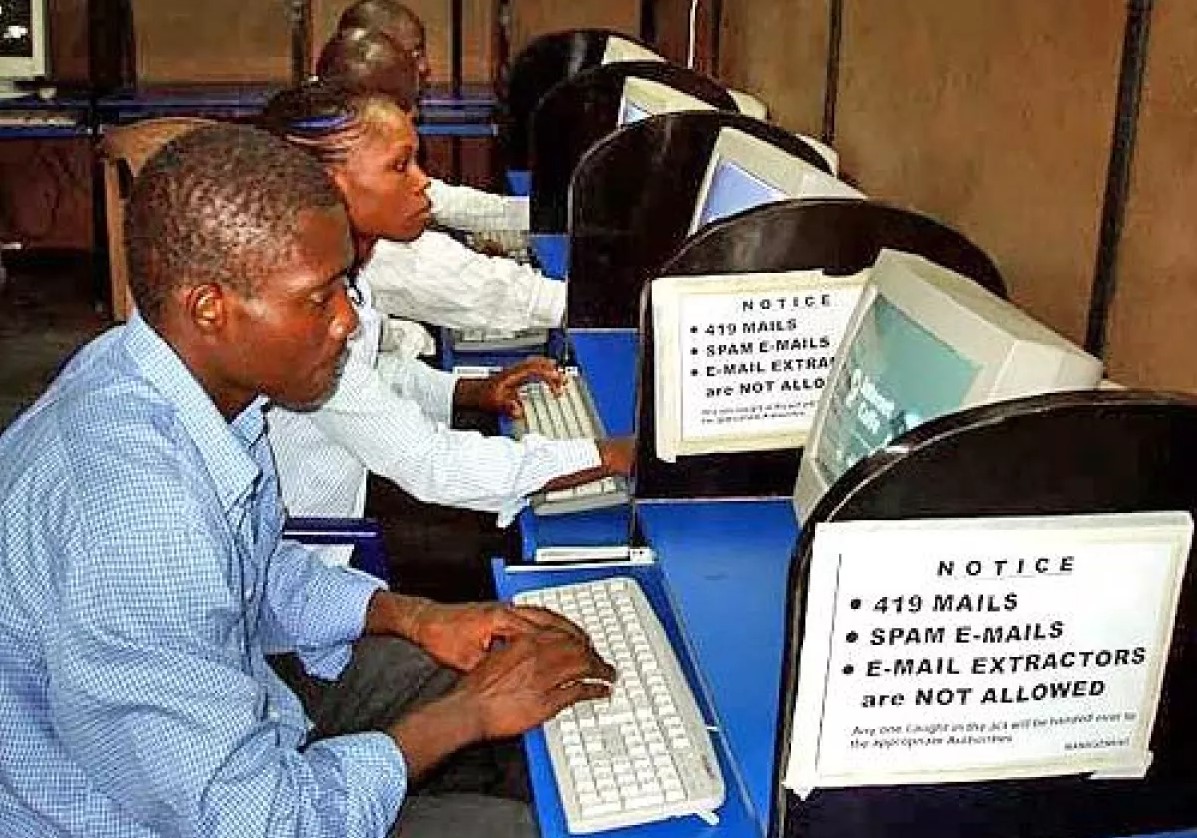

In the field of antispoofing, the use of NLP and advanced chatbots is seen as a challenge: not only can they be exploited to sow disinformation or spread hate speech, but they also bring a new level of deceit to malicious interactions.

Turing Test

A widely recognized test, known as the Turing Test, was developed to evaluate whether a machine exhibits intelligent behavior equivalent to, or indistinguishable from, that of a human.

Turing Test Definition

The test, initially called "The Imitation Game," was proposed by Alan Turing in 1950, even though the author himself considered the question “Can machines think?” too “meaningless” to deserve discussion. The Turing Test, possibly based on Descartes’ language test, is simple: if a human who blindly interrogates a computer from a separate room mistakes its answers for those of a human, the test is passed.

Turing himself predicted how well machines would eventually play the game:

“I believe that in about fifty years’ time it will be possible to programme computers, with a storage capacity of about 10^9, to make them play the imitation game [i.e. pass the Turing test] so well that an average interrogator will not have more than 70 percent chance of making the right identification after five minutes of questioning.”

The validity of the test was disputed in 1980 by John Searle. In his Chinese room argument, partly based on Leibniz’s mill argument, he claims that an entity, such as a person who doesn’t know Chinese but has to answer questions in that language, can successfully emulate cognition by using a database and a rule book with instructions on how to manipulate the data, without understanding anything it says.

Passing the Turing Test

There have been a few highly publicized instances of AI chatbots allegedly passing the Turing Test.

Cleverbot

The first program reported to pass the Turing Test was Cleverbot, designed by Rollo Carpenter. It reportedly did so in 2011 in Guwahati, India. The experiment involved 30 volunteers blindly texting with an unknown partner. Cleverbot was judged to be human 59.3% of the time, while the actual human participants were judged human 63.3% of the time.

Eugene Goostman

Another instance of an AI beating the Turing test was announced in 2014, when a chatterbot dubbed Eugene Goostman, developed by the Veselov/Demchenko team, allegedly met the test’s criteria during a competition organized by the University of Reading.

LaMDA

Google’s LaMDA, or Language Model for Dialogue Applications, was reported to have passed the Turing test in 2022 by generating realistic answers and displaying almost human-like cognition, according to the company’s engineer Blake Lemoine.

As a result, linguists and developers began to wonder whether the test has become obsolete in the era of Large Language Models (LLMs). LLMs simply generate the words most likely to appear next in a conversational sequence, a kind of rule-following without understanding that the Chinese room argument anticipated. Though these answers can appear to replicate conscious thought, experts argue that new goalposts are needed to gauge “true” intelligence.
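The idea of generating the most likely next word can be shown with a toy bigram model. This is a deliberately tiny sketch, assuming whitespace tokenization and simple counting; real LLMs use neural networks trained over subword tokens, and the function names here are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_words):
    """Greedily extend `start` by repeatedly picking the most likely next word."""
    words = [start.lower()]
    while len(words) < max_words:
        following = most_likely_next(counts, words[-1])
        if following is None:
            break
        words.append(following)
    return " ".join(words)


# Hypothetical three-sentence training corpus.
CORPUS = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "a dog sat on the rug",
]
MODEL = train_bigrams(CORPUS)
```

Calling `generate(MODEL, "a", 6)` chains the most frequent continuations into a fluent-looking phrase that never appeared in the corpus, even though the model only looks up counts.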

Emerging Turing Test Alternatives

With the Turing Test being seen as more and more outdated, alternative tests have been proposed to analyze the “sentience” of AI.

Winograd Schema Challenge

The Winograd Schema Challenge (WSC), inspired by the work of Terry Winograd, is a reading comprehension test based on single binary questions. Each question includes:

- A pair of noun phrases.

- A possessive adjective or a pronoun.

- A question asking which noun phrase the pronoun or possessive adjective refers to.

- A special word and an alternate word: swapping one for the other keeps the sentence grammatically correct but changes the correct answer.

In simple terms, WSC focuses on subtle shifts in the context of a phrase. While perfectly understandable to a human being, these subtleties demand a short, unambiguous answer from an AI and give it no space for “verbal acrobatics”.
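The components listed above can be sketched as a small data structure. The sentence used is the classic councilmen and demonstrators example from Winograd's work; the class and field names are hypothetical and do not follow any official WSC file format.

```python
from dataclasses import dataclass


@dataclass
class WinogradSchema:
    template: str        # sentence with a {word} slot for the special/alternate word
    candidates: tuple    # the pair of noun phrases
    pronoun: str         # the ambiguous pronoun or possessive adjective
    special_word: str
    alternate_word: str
    answers: dict        # maps each word to the noun phrase the pronoun refers to

    def sentence(self, word: str) -> str:
        return self.template.format(word=word)

    def question(self) -> str:
        first, second = self.candidates
        return f"Who does '{self.pronoun}' refer to: {first} or {second}?"

    def is_correct(self, word: str, answer: str) -> bool:
        return self.answers[word] == answer


SCHEMA = WinogradSchema(
    template="The city councilmen refused the demonstrators a permit "
             "because they {word} violence.",
    candidates=("the city councilmen", "the demonstrators"),
    pronoun="they",
    special_word="feared",
    alternate_word="advocated",
    answers={
        "feared": "the city councilmen",
        "advocated": "the demonstrators",
    },
)
```

Swapping `special_word` for `alternate_word` leaves the sentence grammatical but flips the correct referent, so a system that always picks the same candidate scores no better than chance.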

The Lovelace 2.0

The Lovelace 2.0 test is an update to the original Lovelace test, which was proposed by Bringsjord et al. and named after the 19th-century mathematician Ada Lovelace. The original test aimed to measure an AI’s ability to conceive a “creative artifact” (a novel, poem, or painting), a task that requires a broad repertoire of human cognitive capabilities, such as imagination.

The Lovelace 2.0 test makes this challenge somewhat trickier for the AI to pass by using constraints. The concept includes the following parameters:

- The artificial agent a is tasked to generate a creative artifact o of a certain type t.

- The artifact must satisfy a set of constraints C, where each c_i ∈ C is a creative criterion that can be expressed in natural human language, such as form, genre, plot, or featured characters.

The type and criteria are selected by a human evaluator or referee r, who decides whether the creative artifact o meets all the required criteria and looks like a realistic product of an average human’s imagination.
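The roles of the agent a, artifact o, type t, constraints C, and referee r can be laid out as a small protocol sketch. Everything below is an assumption made for illustration: in the actual test the referee is a human, while `keyword_referee` is a toy stand-in that only checks for keywords and cannot judge whether an artifact looks human-made.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Challenge:
    artifact_type: str           # t, for example "story"
    constraints: Sequence[str]   # C, each c_i stated in natural language


# Hypothetical stand-ins: an agent maps a challenge to an artifact o, and a
# referee judges one criterion at a time. In the real test, r is a human.
Agent = Callable[[Challenge], str]
Referee = Callable[[str, str], bool]


def passes(agent: Agent, referee: Referee, challenge: Challenge) -> bool:
    """The agent passes only if o has type t and satisfies every c_i in C."""
    artifact = agent(challenge)
    type_ok = referee(artifact, f"is a {challenge.artifact_type}")
    return type_ok and all(referee(artifact, c) for c in challenge.constraints)


def keyword_referee(artifact: str, criterion: str) -> bool:
    """Toy rule: 'mentions X' holds if X occurs in the artifact.

    Any other criterion is accepted unchecked, which a human referee would not do.
    """
    prefix = "mentions "
    if criterion.startswith(prefix):
        return criterion[len(prefix):].lower() in artifact.lower()
    return True


CHALLENGE = Challenge("story", ["mentions dragon", "mentions lighthouse"])
```

A single failed criterion is enough to fail the whole challenge, which is what makes adding constraints harder for the agent than open-ended generation.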

Datasets for AI Chatbots

Datasets are an invaluable resource both for collecting reference information about AI-generated texts and for training chatbots. Seven well-known datasets and benchmarks for chatbots are described below.

TuringBench

TuringBench is a benchmark environment that offers datasets, a leaderboard, and two types of tasks: Turing Test (TT), which differentiates machine and human-written texts, and Authorship Attribution (AA), which identifies the true author of a text. The goal of TuringBench is to assist researchers in identifying markers which could distinguish human-written texts from AI-generated texts.

The datasets comprise 168,612 articles — both machine-generated and gathered from published news sources. These are split into two categories — TT and AA — and are also segregated into train/validation/test sets with a respective ratio of 70:10:20.
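As a rough illustration of what a 70:10:20 ratio means for a corpus of this size, the arithmetic can be sketched as follows. This is only an assumption about how such a split could be computed; the actual TuringBench files may be partitioned per task and per generator, so their sizes can differ from these figures.

```python
def split_sizes(total, ratios=(70, 10, 20)):
    """Return (train, validation, test) sizes for percentage ratios.

    Integer arithmetic is used, and the test set takes the remainder so that
    the three parts always add up to `total`.
    """
    if len(ratios) != 3 or sum(ratios) != 100:
        raise ValueError("ratios must be three percentages that sum to 100")
    train = total * ratios[0] // 100
    validation = total * ratios[1] // 100
    test = total - train - validation
    return train, validation, test


TRAIN, VALIDATION, TEST = split_sizes(168_612)
```

Under these assumptions, 168,612 articles yield 118,028 for training, 16,861 for validation, and 33,723 for testing.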

Topical-Chat

Topical-Chat is an open-domain knowledge-grounded dataset focused on conversational topics and their potential depth and breadth. It consists of conversations among volunteers which are based on 8 popular topics — Fashion, Politics, Science, Music, and so on — with materials drawn from the Washington Post, Wikipedia lead sections, and the internet forum Reddit. The purpose of the dataset is to train a chatbot capable of meaningful conversation.

MultiWOZ

MultiWOZ is a data corpus focused on Task-Oriented Dialogue (TOD), which contains 10,000 human-written dialogues that span multiple domains. The primary purpose of MultiWOZ is to help an AI learn to mimic natural conversation from a corpus of human-to-human dialogues.

DailyDialog

DailyDialog is a multi-turn dataset of conversation samples on mundane, everyday topics. Its goal is to explore the emotional and empathic content of human conversation through its 13,118 dialogues.

ConvAI2

ConvAI2 is a publicly available dataset based on the Persona-Chat database with 131,438 examples, 17,878 dialogues, and 1,155 personas. The goal of ConvAI2 is to provide data regarding conversation quality to aid in chatbot training and development.

SQuAD 2.0

The Stanford Question Answering Dataset, or SQuAD, is a reading comprehension dataset built from Wikipedia articles, in which the answer to each question is a span (a segment of the article’s text). SQuAD 2.0 combines the 100,000 questions of SQuAD 1.1 with over 50,000 unanswerable questions. The goal of SQuAD 2.0 is to improve chatbots’ ability to determine when no answer is available.
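The difference between answerable and unanswerable questions can be sketched with two records shaped like SQuAD 2.0 entries, which mark unanswerable questions with an `is_impossible` flag. The context and questions below are made up for illustration, and `exact_match` is a simplified stand-in for the official scoring, which normalizes text more thoroughly.

```python
CONTEXT = "Cleverbot was designed by Rollo Carpenter."

# Hypothetical records in a SQuAD 2.0-like shape; not taken from the dataset.
ANSWERABLE = {
    "question": "Who designed Cleverbot?",
    "answers": [{"text": "Rollo Carpenter",
                 "answer_start": CONTEXT.index("Rollo Carpenter")}],
    "is_impossible": False,
}
UNANSWERABLE = {
    "question": "In which year was Cleverbot designed?",
    "answers": [],
    "is_impossible": True,
}


def gold_answers(qa):
    """Return the accepted answer texts, or an empty list if none exists."""
    if qa.get("is_impossible", False):
        return []
    return [answer["text"] for answer in qa["answers"]]


def exact_match(prediction, qa):
    """Simplified scoring: an empty prediction means the system abstained."""
    golds = gold_answers(qa)
    if not golds:
        return prediction == ""  # abstaining is the only correct response
    normalized = prediction.strip().lower()
    return normalized in {gold.strip().lower() for gold in golds}
```

A system that always produces some span is marked wrong on every unanswerable question, so it has to learn when to return nothing.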

GLUE

The General Language Understanding Evaluation, or GLUE, is a collection of resources for training and evaluating natural language understanding systems. It includes single-sentence and sentence-pair language understanding tasks, a diagnostic dataset dedicated to various linguistic phenomena, and a leaderboard for models tested with GLUE.

Methods and Experiments

A variety of chatbot detection methods have been developed. Among them is the adversarial Turing Test (ATT), which is based on two main elements: defending descriptors and attacking generators. The model includes supervised learning, machine-generated hypotheses, reinforcement learning, and other components. These culminate in the iterative attack-defense game capable of identifying machine text.

Other methods include training an AI judge on Word2Vec embeddings and even orchestrating a massive online chat game with 1.5 million participants that combined the Turing Test with its reversed version, in which people pretend to be chatbots. The goal of the game was to identify indicators typical of chatbot speech. The results showed that participants identified their chat partner correctly in only about 68% of cases.

Notable Cases of Chatting with Machines

During an inverse Turing test, ChatGPT was asked whether it could pass the test and what parameters it would use to develop its own version. Its response was rather vague and deflecting. However, when prompted to develop its own humanness test, it came up with a series of questions.

Manual Detection

LLM-based solutions can adopt a more realistic speech style and pass as a living human more convincingly. According to Jesse McCrosky, a data scientist at the Mozilla Foundation, even AI-based detectors can fail to spot adaptive synthetic texts.

In order to prevent the public from falling for fraudulent chatbot messages, it’s important to highlight some ways people can attempt to distinguish a chatbot from a human.

To detect a chatbot manually, it is suggested that people pay attention to:

- Monotony in answers.

- Overly quick response speed.

- Stiff and unnatural tone of speech.

- Grammar and punctuation that are flawless or even overly proper.

- Logical faults and blunders in longer narration.
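Two of the signals above, response speed and monotony, are easy to quantify, so they can be sketched as simple heuristics. The thresholds and names below are hypothetical and are not calibrated on real data; the other signals (tone, grammar, logical consistency) need human judgment and are not covered.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration only.
MAX_HUMAN_CHARS_PER_SECOND = 10.0
MIN_DISTINCT_RATIO = 0.7


@dataclass
class Reply:
    text: str
    seconds_to_respond: float


def chatbot_signals(replies):
    """Return the names of the heuristic signals triggered by a conversation."""
    signals = []
    if not replies:
        return signals

    # Signal 1: most replies arrive faster than a person could plausibly type them.
    fast = [
        r for r in replies
        if len(r.text) / max(r.seconds_to_respond, 0.001) > MAX_HUMAN_CHARS_PER_SECOND
    ]
    if len(fast) > len(replies) / 2:
        signals.append("overly quick responses")

    # Signal 2: a large share of the replies are verbatim repeats.
    distinct = {r.text.strip().lower() for r in replies}
    if len(distinct) / len(replies) < MIN_DISTINCT_RATIO:
        signals.append("monotonous answers")

    return signals
```

Heuristics like these can only raise suspicion: a fast human typist or a chatbot with artificial delays would defeat both checks.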

Chatbot technology is just one aspect of AI that fraudsters can use to deceive their targets. Social media is now full of “cheapfakes” which involve deceptive imagery. To read more about cheapfakes and how to detect them, check out this article.

