How Accurate Are AI-Generated Text Detectors?

AI text detectors aim to distinguish text generated by deep neural models, such as GPT, RNN, GAN, and others, from human writing. Although such detectors have developed in parallel with the content generators themselves, their accuracy remains debatable. According to a 2023 study, an OpenAI classifier tool correctly identified only 26% of AI-written texts.

This is due to the AI’s capabilities:

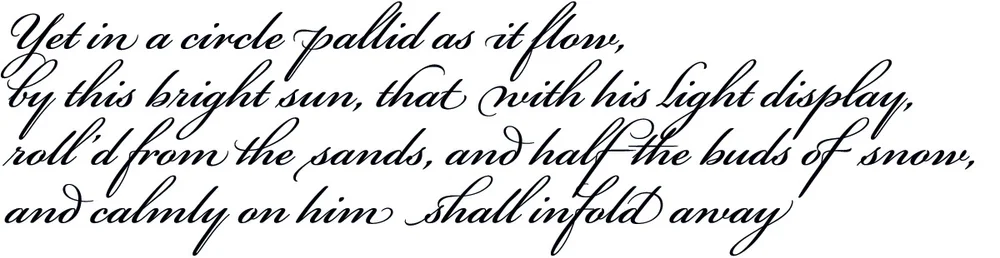

- Emulating various genres: poem, essay, story, news article, etc.

- Copying writing patterns intrinsic to human authors, even those classically acknowledged.

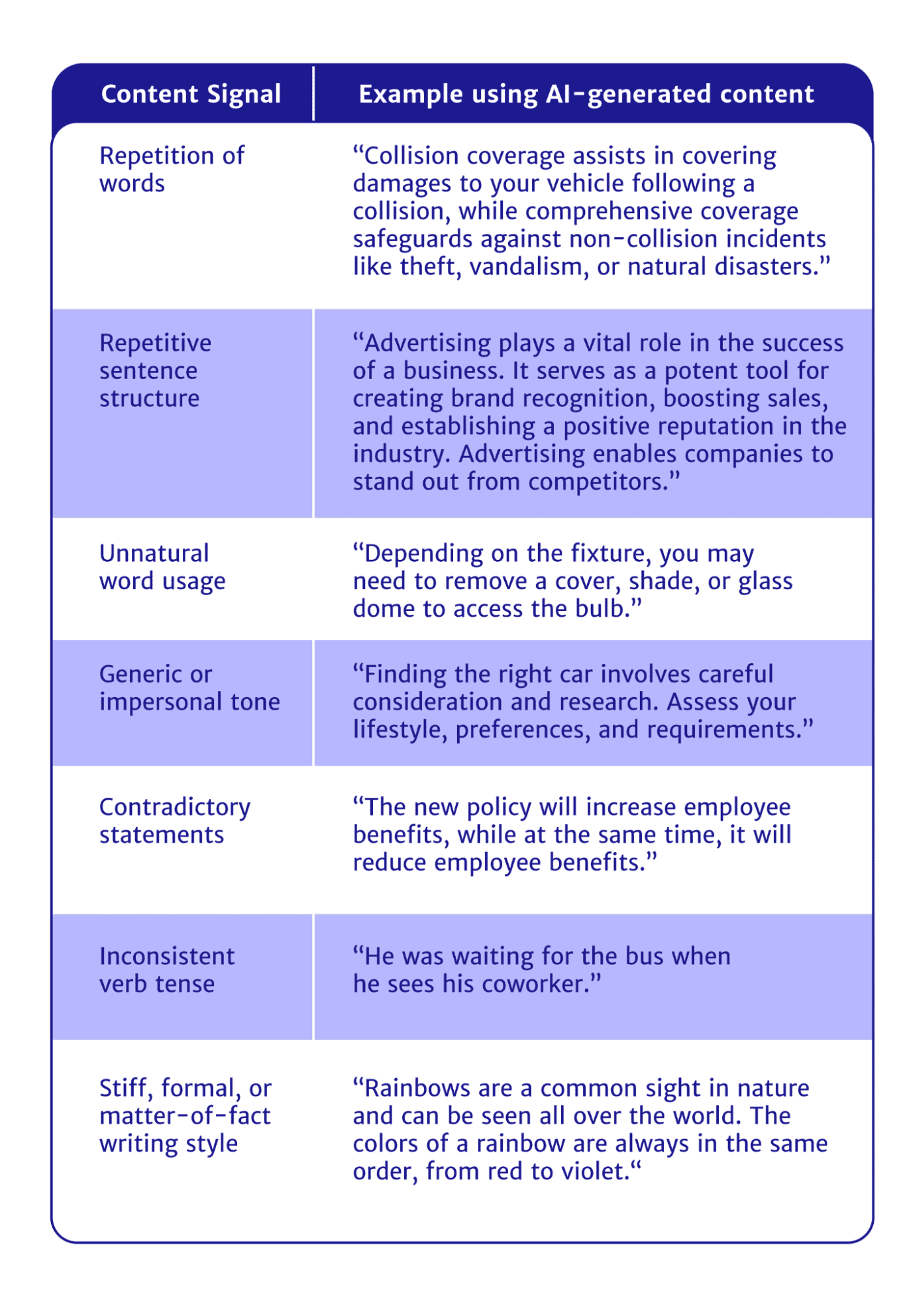

However, GenAI is known to “pollute” its output with signals that give away its synthetic origin. AI text detectors focus on the following parameters:

- Burstiness. This refers to variation in sentence length and structure within a text. AI tends to be monotonous in sentence length, while human writers mix short and long sentences. This variation shapes the rhythm of a text, which is crucial for engaging the reader and expressing ideas, especially in creative writing.

- Unpredictability. AI text is more predictable than human writing and often lacks a sense of surprise. This parameter is also partly related to temperature, a sampling setting that controls how random the model's word choices are: a higher temperature favors less likely, more flamboyant vocabulary.

- Hallucination. AI can present false statements as established facts or produce contradictory narratives.

- Structure and wording. GenAI tends to repeat words, use odd or unnatural lexemes, sound formal and stiff, and even misuse verb tenses.
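The first two signals can be approximated with simple statistics. The sketch below is a minimal illustration under two assumptions: burstiness is measured as the coefficient of variation of sentence length (standard deviation divided by mean), and predictability as perplexity under an add-one-smoothed unigram model built from a reference text. Real detectors score perplexity with a large language model instead; all helper names here are hypothetical.

```python
import math
import re
import statistics
from collections import Counter


def sentence_lengths(text: str) -> list[int]:
    """Naively split on ., ! and ? and count the words in each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (population stdev / mean).

    0.0 means every sentence has the same length; higher values mean a
    livelier mix of short and long sentences.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model built
    from `reference` (a toy stand-in for a real language model).
    Lower values mean more predictable wording."""
    counts = Counter(reference.lower().split())
    total = sum(counts.values())
    vocab = len(counts) + 1  # +1 reserves probability mass for unseen words
    words = text.lower().split()
    if not words:
        raise ValueError("text must contain at least one word")
    log_prob = sum(math.log((counts[w] + 1) / (total + vocab)) for w in words)
    return math.exp(-log_prob / len(words))


# Monotonous rhythm versus a mix of short and long sentences.
uniform = "The cat sat down. The dog ran off. The bird flew up."
varied = "Stop. The old man walked slowly along the quiet river bank at dusk. Why?"
```

Here `burstiness(uniform)` is 0.0 because all three sentences have four words, while `varied` (sentence lengths 1, 12 and 1) scores above 1.0. A text built from words that are frequent in the reference gets a lower perplexity than one made of unseen words.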

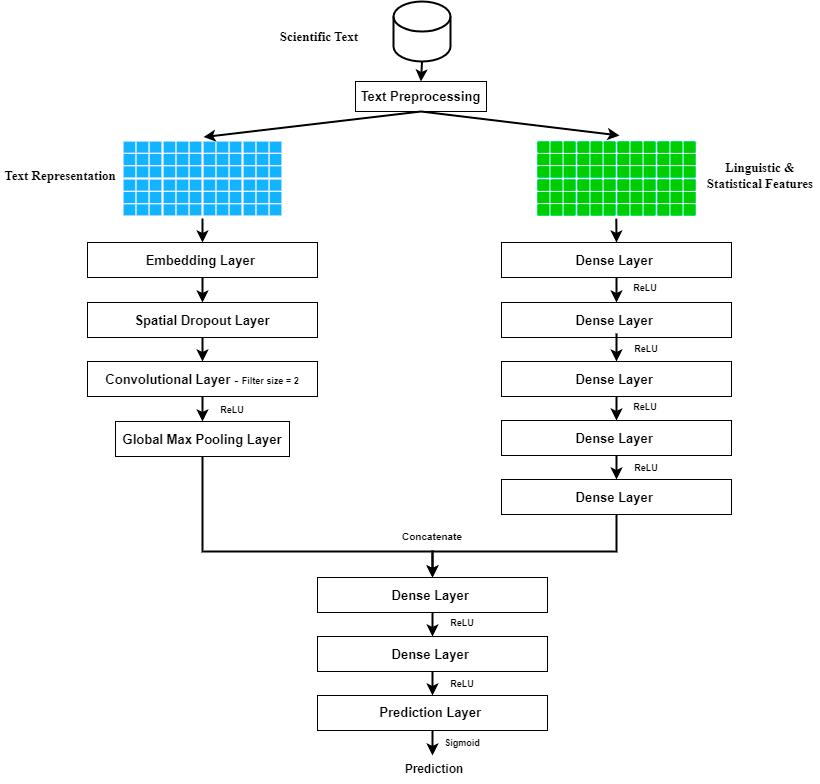

Various neural models have been adapted for AI text detection. Among these are Bidirectional Encoder Representations from Transformers (BERT), multilayer perceptrons, Convolutional Neural Networks (CNNs), and others.

Experiments Comparing Accuracy of AI-Generated Text Detectors

Various studies have assessed the performance of AI text detectors. One 2024 study found that the median accuracy of a free detector was 27.2%; a premium detector performed better but still struggled to identify AI-generated texts, especially those produced by Anthropic’s Claude model.

Another experiment used a medical essay written by ChatGPT 3.5 and then paraphrased with free and paid tools. Of the 10 detectors tested, only five identified the AI writing with 100% accuracy, while the others performed at chance level, with about 50% accuracy.
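To make the "chance level" comparison concrete, here is a minimal sketch of how a detector's accuracy might be computed on a labeled test set. It assumes a balanced, hypothetical set of 500 AI-written and 500 human-written texts and a hypothetical `evaluate` helper; it also reports true- and false-positive rates, since accuracy alone can hide how a detector fails.

```python
import random
from dataclasses import dataclass


@dataclass
class DetectorScores:
    accuracy: float             # share of all texts labeled correctly
    true_positive_rate: float   # share of AI texts flagged as AI
    false_positive_rate: float  # share of human texts wrongly flagged as AI


def evaluate(labels: list[bool], predictions: list[bool]) -> DetectorScores:
    """Compare detector output with ground truth (True = AI-written)."""
    if len(labels) != len(predictions) or not labels:
        raise ValueError("labels and predictions must be non-empty and equally long")
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y and p)
    tn = sum(1 for y, p in pairs if not y and not p)
    fp = sum(1 for y, p in pairs if not y and p)
    n_ai = sum(labels)
    n_human = len(labels) - n_ai
    return DetectorScores(
        accuracy=(tp + tn) / len(pairs),
        true_positive_rate=tp / n_ai if n_ai else 0.0,
        false_positive_rate=fp / n_human if n_human else 0.0,
    )


# Balanced hypothetical test set: 500 AI-written and 500 human-written texts.
labels = [True] * 500 + [False] * 500

# Flagging everything as AI gives exactly 50% accuracy on a balanced set.
always_ai = evaluate(labels, [True] * len(labels))

# A coin-flipping detector also lands near 50%: chance level.
rng = random.Random(0)
coin_flip = evaluate(labels, [rng.random() < 0.5 for _ in labels])
```

The "always AI" detector catches every AI text (true-positive rate 1.0) yet matches the coin flip on accuracy, because it also flags every human text (false-positive rate 1.0). This is why the two error rates are worth reporting separately.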

An unusual 2023 experiment ran six AI-generated and subsequently modified texts through 11 detectors. The modifications included paraphrasing, replacing Latin letters with Cyrillic ones, introducing grammar mistakes, and so on. A 1960 text by the famous orthopedic surgeon John Charnley was also included in the test pool. Each modified AI text was classified as human-written by at least half of the detectors, while Charnley’s text was flagged as partly AI-written.
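Replacing Latin letters with Cyrillic lookalikes (homoglyphs) presumably works because the text looks unchanged to a human reader while its underlying code points differ, so a detector's tokenizer receives different input. The sketch below shows the substitution and one simple countermeasure a detector might apply. The five-letter mapping and the helper names are hypothetical and far from exhaustive; the confusables data in Unicode Technical Standard #39 is much more complete.

```python
import unicodedata

# Hypothetical, non-exhaustive Latin -> Cyrillic lookalike table.
HOMOGLYPHS = {
    "a": "\u0430",  # CYRILLIC SMALL LETTER A
    "c": "\u0441",  # CYRILLIC SMALL LETTER ES
    "e": "\u0435",  # CYRILLIC SMALL LETTER IE
    "o": "\u043e",  # CYRILLIC SMALL LETTER O
    "p": "\u0440",  # CYRILLIC SMALL LETTER ER
}
REVERSE = {cyr: lat for lat, cyr in HOMOGLYPHS.items()}


def spoof(text: str) -> str:
    """Swap Latin letters for visually identical Cyrillic ones."""
    return "".join(HOMOGLYPHS.get(ch, ch) for ch in text)


def scripts_used(text: str) -> set[str]:
    """Script of each letter, taken from the first word of its Unicode name."""
    return {unicodedata.name(ch).split()[0] for ch in text if ch.isalpha()}


def normalize(text: str) -> str:
    """Countermeasure: map known lookalikes back to Latin before scoring."""
    return "".join(REVERSE.get(ch, ch) for ch in text)


original = "a deep neural network can produce"
spoofed = spoof(original)
```

The spoofed string renders almost identically but no longer equals the original. Mixed Latin and Cyrillic letters inside English words are a strong hint of tampering, and normalizing them back restores the original text before it reaches the detector.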

Accuracy of Non-English Detectors

It is reported that detection models such as XLM-R, which relies on self-supervised training for multilingual understanding, fail to distinguish AI-generated from human-written texts in languages they were not trained on. It is also assumed that most detectors are trained monolingually, which can undermine fairness and introduce bias when evaluating authors who prefer “non-standard linguistic styles”.

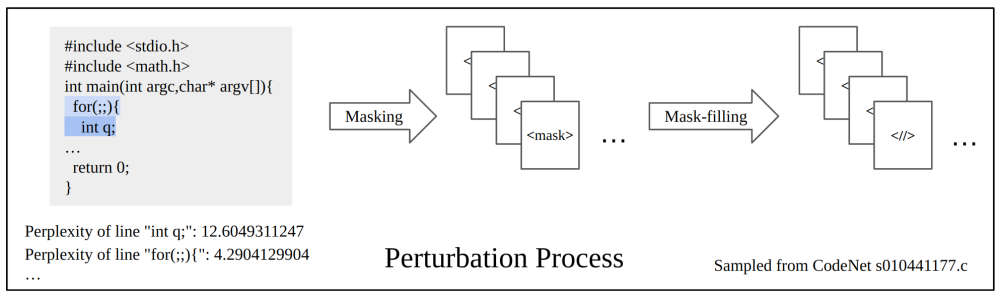

Detecting AI-Generated Code

One method of detecting AI-generated code works in three steps: perturbation, scoring, and prediction. Generated code tends to have lower perplexity than human-written code, so small perturbations raise its perplexity more noticeably. This makes it possible to introduce a scoring scheme that ranks code by how much its perplexity varies under perturbation, placing generated code higher.
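The three steps can be sketched as a toy pipeline. This is an illustration under loudly stated assumptions, not the method from the study: a token bigram model with add-one smoothing (hypothetical `BigramModel`) stands in for a code language model, the perturbation (hypothetical `perturb`) swaps one random pair of adjacent tokens, the score is the mean rise in log-perplexity across perturbed copies, and the 0.3 threshold is arbitrary.

```python
import math
import random
import re
from collections import Counter

# Identifiers/numbers, or single punctuation characters.
TOKEN = re.compile(r"\w+|[^\w\s]")


def tokenize(code: str) -> list[str]:
    return TOKEN.findall(code)


class BigramModel:
    """Hypothetical stand-in for a code language model:
    token bigrams with add-one smoothing."""

    def __init__(self, corpus: str):
        tokens = tokenize(corpus)
        self.pairs = Counter(zip(tokens, tokens[1:]))
        self.firsts = Counter(tokens[:-1])
        self.vocab = len(set(tokens)) + 1  # +1 reserves mass for unseen tokens

    def log_perplexity(self, tokens: list[str]) -> float:
        """Mean negative log-probability per bigram; lower = more predictable."""
        nll = 0.0
        for a, b in zip(tokens, tokens[1:]):
            p = (self.pairs[(a, b)] + 1) / (self.firsts[a] + self.vocab)
            nll -= math.log(p)
        return nll / max(len(tokens) - 1, 1)


def perturb(tokens: list[str], rng: random.Random) -> list[str]:
    """Step 1 (hypothetical choice): swap one random pair of adjacent tokens."""
    out = list(tokens)
    j = rng.randrange(len(out) - 1)
    out[j], out[j + 1] = out[j + 1], out[j]
    return out


def score(code: str, model: BigramModel, n: int = 20, seed: int = 0) -> float:
    """Step 2: mean rise in log-perplexity across n perturbed copies."""
    tokens = tokenize(code)
    if len(tokens) < 2:
        raise ValueError("need at least two tokens to perturb")
    rng = random.Random(seed)
    base = model.log_perplexity(tokens)
    perturbed = [model.log_perplexity(perturb(tokens, rng)) for _ in range(n)]
    return sum(perturbed) / n - base


def predict(code: str, model: BigramModel, threshold: float = 0.3) -> bool:
    """Step 3: label as generated if the score exceeds an arbitrary threshold."""
    return score(code, model) > threshold


snippet = "for i in range(10):\n    total += i\n"
model = BigramModel(snippet * 50)
memorized = score(snippet, model)  # low perplexity, standing in for generated code
novel = score("yield node if node is not None else default", model)
```

In this toy setup, the memorized snippet gets a clearly positive score, since every swap breaks bigrams the model knows well, while the snippet made of unseen tokens scores 0, because every bigram in it is equally surprising before and after a swap. A real detector would use a code LLM in place of the bigram model and tune the threshold on labeled data.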

Trends in Detecting AI-Generated Texts

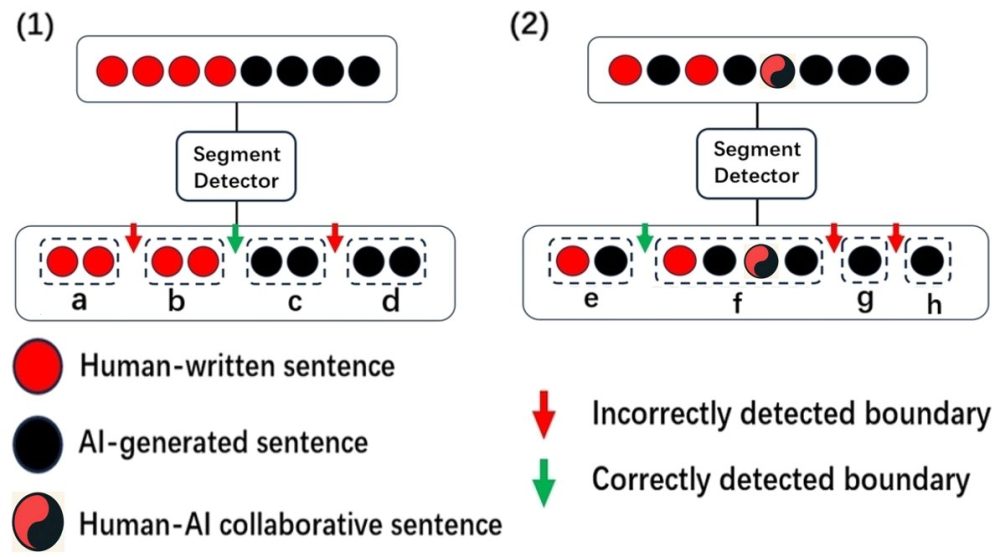

One view holds that AI text detection may eventually become impractical, if not impossible, as generators become extremely human-like. An opposing view argues that human writing is too diverse, given the sheer size of the human population, to share the same distributions as AI output. Other research calls for a focus on hybrid texts, which are started by a human and finished by an AI, as they can be particularly hard to expose.

Antispoofing
