General Methods of Bypassing Liveness Detection

Biometric liveness detection is a necessary component of modern verification systems. However, the use of biometric verification in consumer-grade devices (Internet of Things) and smartphones has led to an increase in biometric spoofing, which is carried out via numerous Presentation Attacks (PAs).

In liveness taxonomy, PAs can be categorized by attack sophistication, method of execution, and the extent of damage inflicted on the system. The tools and methods of a PA are largely determined by the type of sensors used in the targeted recognition system. Fraudsters replicate facial features, fingerprints, voice, and in some cases even eyes. Certain systems have proved highly vulnerable. Reportedly, Face ID could be unlocked with glasses that had black dots taped to them to imitate human eyes. Another experiment showed that the iPhone X could be unlocked using a 3D mask that cost only $150 to make.

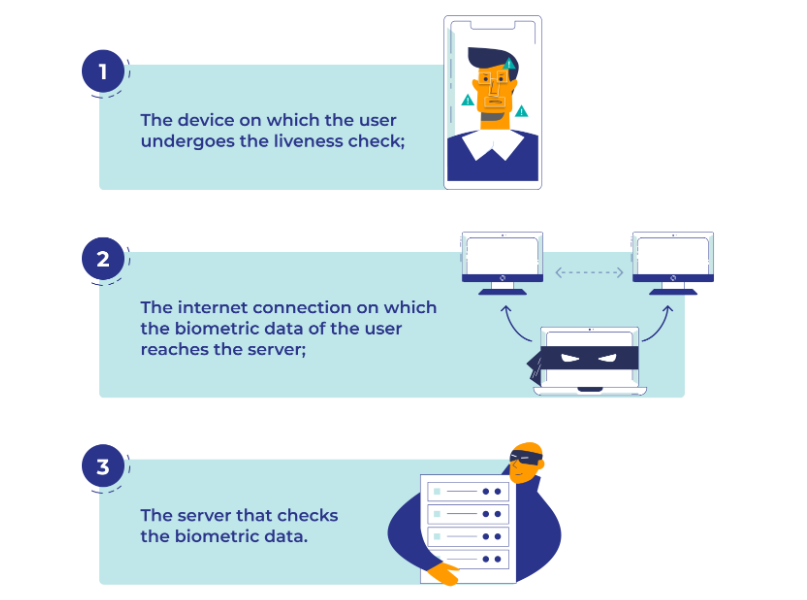

Presentation Attack Instruments (PAIs) also come in many varieties: from printed photos to highly complex face or voice manipulations and realistic masks made from silicone elastomers that can simulate human skin. Another method of PA execution is bypassing or hacking, which employs injection or data-swapping attacks. These allow an attacker to tamper with the signal received by a sensor or to manipulate the biometric data stored inside the system. Such attacks target hacked or stolen devices, intercepted internet traffic, and servers that hold data essential for verification.

Face Spoofing Attacks

There are multiple ways to perform a face spoofing attack. Typical tools include digital face manipulations, face synthesis from scratch, morphing, 2D/3D masks, cutouts, etc. Certain methods involve a multi-step procedure that can successfully circumvent liveness detection.

A notable YouTube channel, White Ushanka, demonstrated spoofing of several digital onboarding platforms. One example involved the following steps:

- Synthetic face. To obtain a picture of a nonexistent person’s face, a number of generators are used: ThisPersonDoesNotExist.com, 100,000 Faces, Virtual Models by Rosebud AI, Generated Photos, and others.

- Face swapping. Then, the synthetic face is placed on a random individual’s photo to make it look more believable. This can be done with either traditional software such as Photoshop or a mobile editor such as FaceApp, B612, MSQRD, Face Swap Live, etc.

- Polishing. The photo undergoes extra doctoring to make it look more organic and erase visible artifacts left by editing.

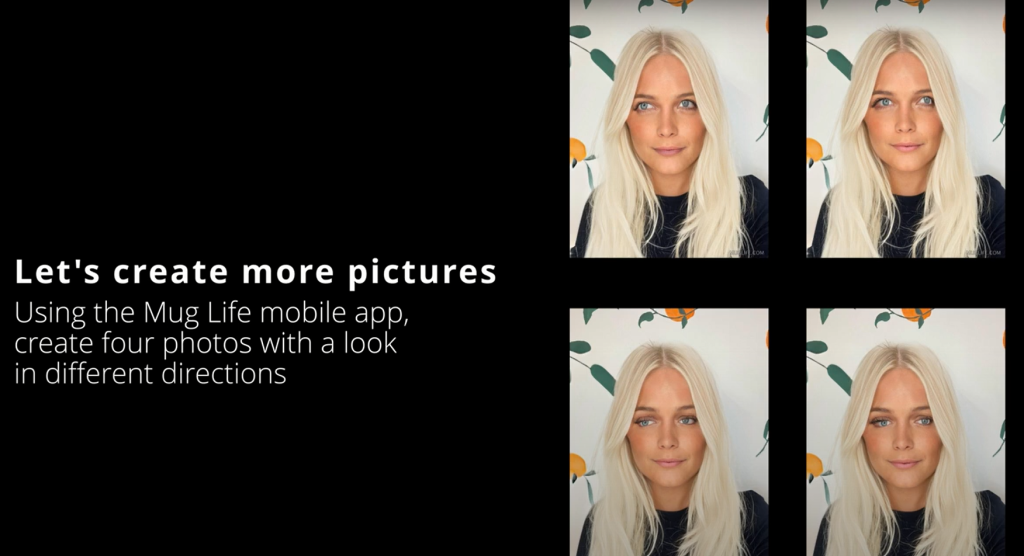

- Multiplication. At this stage a few more copies of the fake photo are created with different expressions, eye directions, or head positions. The step is necessary if the security system is challenge-based and requires the user to perform a task like blinking. This is achievable with apps like Mug Life, Kaedim Platform, Colorful Studio Beta, etc.

- Fake ID. The photo and its altered copies are placed on an ID template.

- Onboarding. An application like Digital Onboarding Toolkit is downloaded to legally register a fake document. A fraudster will perform all actions required by the active verification system simply by presenting the altered copies of the main spoof photo to the camera.

- Completion. The onboarding system will recognize and register the fake document, as demonstrated during the experiment.

However, the success of this method greatly depends on which liveness detection techniques the system uses. State-of-the-art detection systems can effectively spot 2D objects that lack depth and other natural properties of a human face.

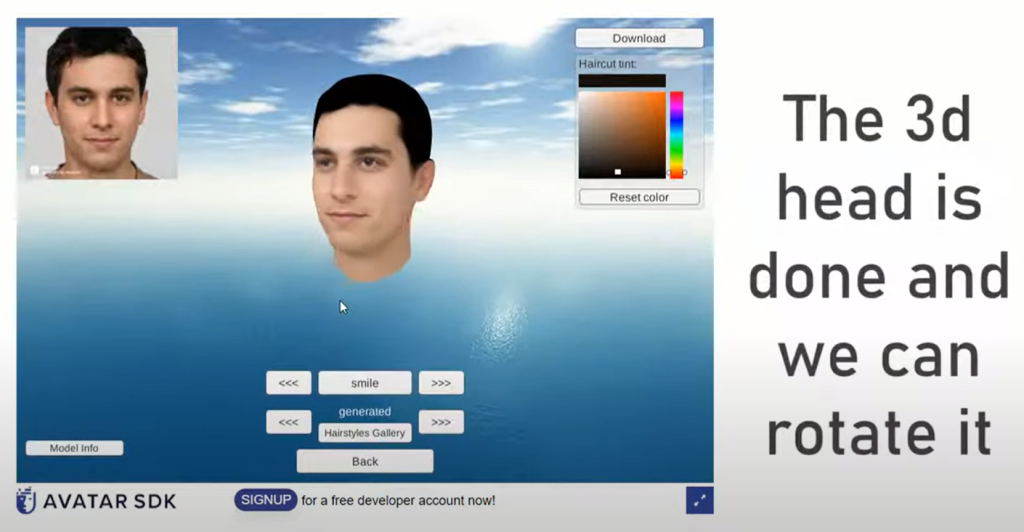

A different technique also employs a synthetic face, together with Avatar SDK for sculpting its 3D copy and the OBS virtual camera to perform an injection-type attack. However, this method does not directly target a liveness detection system and instead exploits backend weaknesses. Facial Antispoofing Wiki classifies the following attack modalities:

Voice Spoofing Attacks

Voice spoofing is currently considered the most effective fraud technique, as it is simple to orchestrate, relies on social engineering, and remains hard to detect. It's responsible for a variety of threats, from KYC spoofing to CEO fraud.

Commonly, there are two attack scenarios: speech synthesis and voice conversion.

Scenario 1. Fraudsters employ a voice-cloning tool based on deep learning to imitate the target’s voice. After ample training time, such a tool can produce fairly realistic output, even picking up subtle nuances of the target’s speech that imitate liveness: accent, intonation, vocal range, tempo-rhythm, and so forth. Such attacks are made even easier by the fact that many voice-mimicking tools are freely available online. A well-known example is CyberVoice (Steosvoice), which was used to mimic Doug Cockle’s voice acting for a Witcher 3 fan mod. Similar solutions include Replica, Resemble.ai, Speechelo, Adobe Voco (unreleased), and others. The training samples are usually taken from the target’s social media or recorded during a phone call.

Scenario 2. Voice conversion allows changing vocal characteristics of an uttered phrase without altering its linguistic content. In other words, a malicious actor can disguise their voice as someone else’s to convey any given message.

At least two instances of successful voice spoofing attacks have been reported. The first documented incident took place in 2019. The target was a manager at a British energy firm who received an ‘urgent’ call from the company’s CEO residing in Germany. During the call, the manager was instructed to wire €220,000 ($231,583) to a Hungarian bank account. The fake voice was made more convincing by the unexpected nature of the call and the overall stress of the situation. Saurabh Shintre from the security company Symantec commented that "When you create a stressful situation like this for the victim, their ability to question themselves for a second <...> goes away".

The second incident resulted in more serious financial damage, with an unnamed U.A.E. company losing $35 million. The attack followed a similar pattern: malicious actors replicated the voice of a company director residing in Dubai and called a Hong Kong bank to confirm an acquisition and approve a multimillion transfer. Interestingly, the attack was backed by a number of false emails, allegedly coming from the company’s legal representative. Classified by the FBI as Business Email Compromise (BEC), the tactic was in use years before deepfakes became publicly known.

Fingerprint Spoofing Attacks

A nontrivial stunt by German computer scientist Jan Krissler showed that a person’s fingerprints can be copied from a distance and then recreated both digitally and physically.

The first instance took place in 2008, when Krissler managed to obtain an index fingerprint of then Interior Minister Wolfgang Schäuble. It was captured from a photo of a water glass that he used during a conference at Humboldt University. The photo was then digitally tweaked and published by the hacker association Chaos Computer Club both as an ink-on-paper image and as a film of flexible rubber. According to Krissler, he bypassed 20 fingerprint readers using the same rubber contraption with his own fingerprints stored in it.

In 2014, Krissler pulled an even more refined stunt when he combined a couple of close-range high-definition shots of Germany’s defense minister Ursula von der Leyen (namely her hands) with fingerprint identification software called VeriFinger. The purpose of these stunts was to prove that biometrics aren’t always safer than a user-generated password.

Deepfake Attacks

To an extent, voice spoofing is a type of deepfake attack, as it employs deep learning for realistic speech synthesis. However, the term ‘deepfake’ mostly refers to fabricated videos featuring the likeness of real people, or to a live video stream in which a person's appearance is exploited.

This attack type has been described in detail by many researchers. However, real-life incidents involving deepfake attacks either weren’t documented or remain undisclosed. Partly, this could be explained by the fact that video deepfake attacks are costly and aren’t always efficient, especially when aimed at real people: according to an MIT study, context awareness can be used to reveal deepfakes.

A serious threat posed by deepfakes is misinformation. As reported by the World Economic Forum, deepfakes have evolved from "a technology that began as little more than a giggle-inducing gimmick" into a serious political force that can predetermine elections through AI-powered fakery. For example, deepfake allegations triggered civil unrest in Gabon, which nearly resulted in a coup d'état.

A widely held view is that deepfakes can be used to create a false narrative that appears to originate from trusted sources, a capability that applies across the criminal spectrum. Schemes such as phishing or identity fraud can greatly benefit from it. Another threat is reputation manipulation, which can result in tremendous losses for an individual or an organization. For instance, a fake statement on behalf of a certain company or its CEO can lead to a collapse in its stock value.

FAQ

Were there any successful cases of spoofing attacks and bypassing biometric liveness detection?

A number of spoofing attack instances were successful in real life.

Spoofing attacks happen on a regular basis: the ID.me service reported 80,000 facial spoofing instances over 8 months between 2020 and 2021. In scientific literature, biometric attacks are mostly presented as experiments in a controlled environment.

Often, Presentation Attacks (PAs) are demonstrated online by independent researchers or laboratories, as in the case of the iPhone X being spoofed with a mask.

Real-life cases of malicious presentations are exposed much more rarely. One real, successful face spoofing attack against ID.me resulted in $900,000 being falsely claimed by a fraudster.

Is it possible to unlock an iPhone with a spoofing attack?

Several experiments proved that the iPhone is susceptible to facial spoofing.

Smartphones are prone to spoofing attacks as some experiments show. During one of them, researchers printed a 3D mask, which resembled an owner’s face. As a result, a number of gadgets identified it as a real face (got spoofed).

A similar experiment took place in 2017, when a Vietnamese cybersecurity company unlocked an iPhone X. Face ID was bypassed with a printed mask costing only $150 to make.

As practice shows, smartphones aren't the only vulnerable systems. In 2019, an experiment hosted by Fortune demonstrated successful spoofing of airport and facial payment terminals. 3D masks were used as Presentation Attack Instruments (PAIs).

What type of biometrics is the easiest to spoof?

There is no definite answer as every biometric modality has its own weaknesses.

It’s difficult to select the most ‘spoofable’ biometric system as all of them have weak and strong aspects. It’s unquestionable that every solution can potentially be bypassed as malicious actors keep improving their techniques.

However, it is assumed that active liveness detection is somewhat more vulnerable to spoofing attacks than passive liveness detection. The reasoning is that active, or challenge-based, systems reveal how they work by giving prompts to the user.

In turn, these prompts can be used for reverse engineering. Passive liveness detection, by contrast, remains a ‘black box’ system, as its algorithms run in the background.
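The reasoning above can be illustrated with a minimal sketch, assuming a hypothetical active liveness verifier that draws its challenges from a small, fixed prompt vocabulary. The prompt names and both functions are illustrative assumptions and are not taken from any real product. The sketch shows that although many ordered challenges are possible, every prompt is visible to the user, so covering the whole vocabulary once is enough to answer any sequence.

```python
import math
import secrets

# Hypothetical prompt vocabulary; real products define their own.
PROMPTS = ("blink", "smile", "turn_left", "turn_right", "nod")


def issue_challenge(length: int = 3) -> list[str]:
    """Draw `length` distinct prompts in random order."""
    pool = list(PROMPTS)
    return [pool.pop(secrets.randbelow(len(pool))) for _ in range(length)]


def prepared_media_covers(challenge: list[str], prepared: set[str]) -> bool:
    """True if a pre-made response exists for every prompt in the challenge."""
    return all(prompt in prepared for prompt in challenge)


# Number of distinct ordered 3-prompt challenges the verifier can issue.
ordered_challenges = math.perm(len(PROMPTS), 3)

challenge = issue_challenge()
# Whoever has observed every prompt needs one response per prompt,
# not one per sequence, to cover any challenge.
covered = prepared_media_covers(challenge, set(PROMPTS))
```

In this toy model the verifier can issue 60 different ordered challenges, yet only 5 distinct prompts ever need to be anticipated. A passive system exposes no comparable vocabulary, which is the sense in which it stays a ‘black box’.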

References

- Face spoof detection test by Forbes's Thomas Brewster

- A review of iris anti-spoofing

- Masks, Animated Pictures, Deepfakes…—Learn How Fraudsters Can Bypass Your Facial Biometrics

- Hackers just broke the iPhone X's Face ID using a 3D-printed mask

- Materials used to simulate physical properties of human skin

- Spoof of Innovatrics Liveness

- Random Face Generator (This Person Does Not Exist)

- Spoof BioID with easy generated deepfake face

- CyberVoice

- An artificial-intelligence first: Voice-mimicking software reportedly used in a major theft

- Fraudsters Cloned Company Director’s Voice In $35 Million Bank Heist, Police Find

- Business Email Compromise

- Deepfake used for altering a music video

- Deepfake Detection by Human Crowds, Machines, and Machine-informed Crowds

- How misinformation helped spark an attempted coup in Gabon

Antispoofing