What Is Generative AI?

Generative AI (GenAI) is a type of artificial intelligence based on deep learning. Its purpose is to synthesise media such as text, music, video, images, and code. Although its outputs can appear novel, GenAI cannot produce them without large training datasets, so they are essentially recombinations of patterns learned from existing material.

What Are the Main Models of Generative AI?

The most prominent types are:

- Diffusion. Used for image, video, and 3D generation, inpainting, computer vision, and LIDAR.

- Generative Pre-trained Transformer and LLMs. Text and code generation, translation, chatbots.

- Variational Autoencoder. Realistic image generation.

- Generative Adversarial Network (GAN). Video/picture synthesis.

- Autoregressive Models. Music, voice, and audio generation; forecasting.

These models also serve as a basis for numerous “derivative” architectures, including hybrid GenAI models such as the Conditional Transformer Language Model (CTRL), T5, and many others.

Review of Generative Models

A brief review of the existing GenAI models:

- Differentiable Generator Network

This is a class of models built around a differentiable function that transforms samples of a latent variable into data samples.

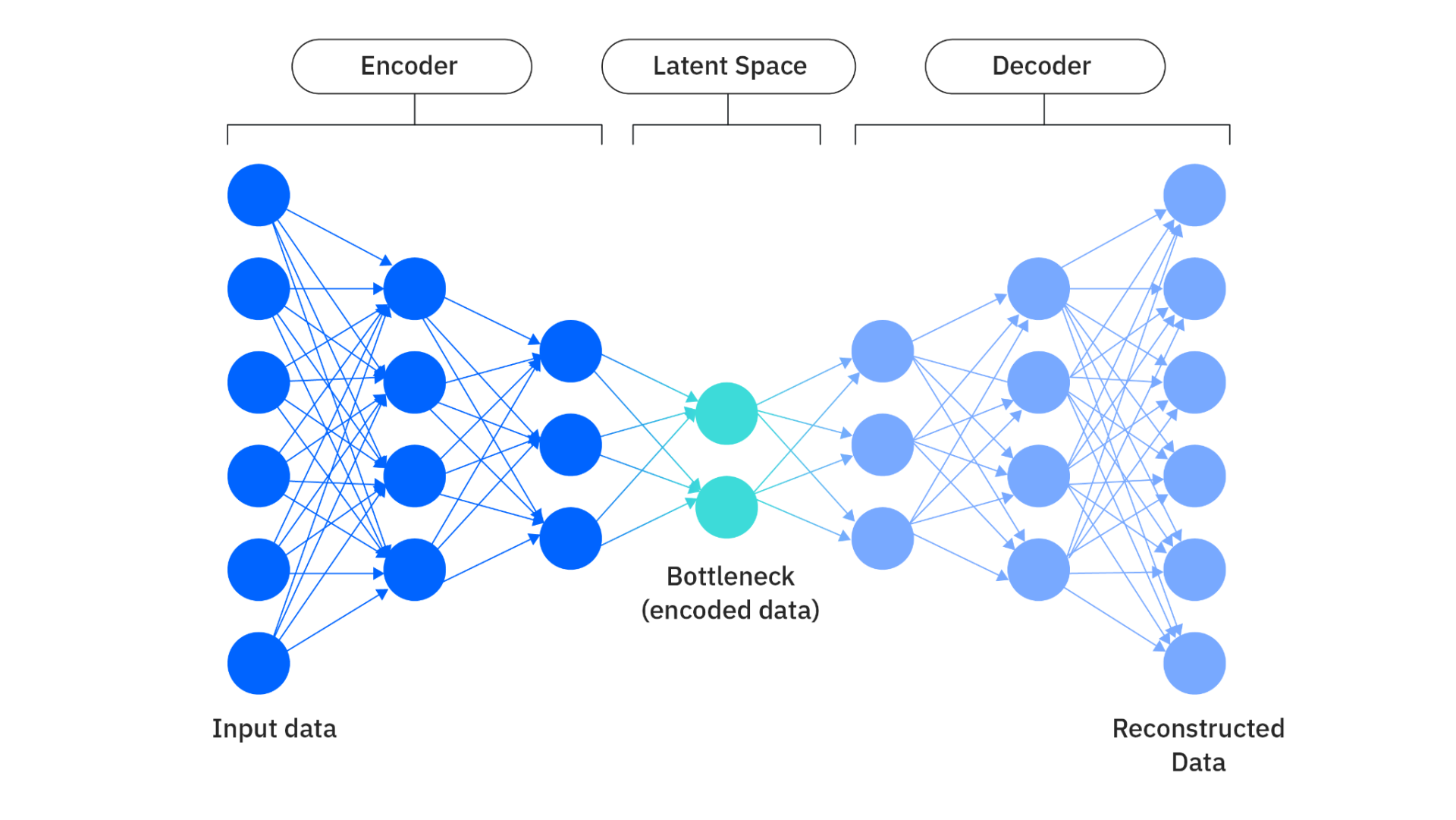

a) Variational Auto-Encoders

A VAE consists of an encoder that maps the input into a latent space, a bottleneck that reduces the dimensionality of complex data while keeping its most important information, and a decoder that reconstructs the original input sample from the latent code.
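
The encoder, bottleneck, and decoder stages can be traced in a minimal sketch. It assumes a linear encoder and decoder with hand-picked, untrained weights (`ENC_MEAN`, `ENC_LOGVAR`, and `DEC` are hypothetical names) and the reparameterization trick commonly used in VAEs. A real VAE learns these weights from data.

```python
import math
import random

# Hypothetical, untrained weights: 4-D input -> 2-D latent -> 4-D output.
ENC_MEAN = [[0.5, 0.5, 0.0, 0.0],
            [0.0, 0.0, 0.5, 0.5]]
ENC_LOGVAR = [[0.0, 0.0, 0.0, 0.0],
              [0.0, 0.0, 0.0, 0.0]]
DEC = [[1.0, 0.0],
       [1.0, 0.0],
       [0.0, 1.0],
       [0.0, 1.0]]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def encode(x):
    """Encoder: map the input to the mean and log-variance of a latent Gaussian."""
    return matvec(ENC_MEAN, x), matvec(ENC_LOGVAR, x)


def sample_latent(mean, logvar, rng):
    """Bottleneck: draw the low-dimensional code z = mean + sigma * eps."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, logvar)]


def decode(z):
    """Decoder: map the low-dimensional code back to input space."""
    return matvec(DEC, z)


rng = random.Random(0)
x = [1.0, 1.0, 3.0, 3.0]
mean, logvar = encode(x)
z = sample_latent(mean, logvar, rng)
x_hat = decode(z)
print(len(x), "->", len(z), "->", len(x_hat))
print("decoded latent mean:", decode(mean))
```

With these particular weights, decoding the latent mean recovers the input exactly, while decoding a sampled `z` gives a noisy variation of it.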

b) Generative Adversarial Networks (GANs)

A GAN consists of two main elements: a Generator and a Discriminator. The competition between them pushes the Generator to create samples that are as realistic as possible, while the Discriminator judges how believable they are compared to the training data.

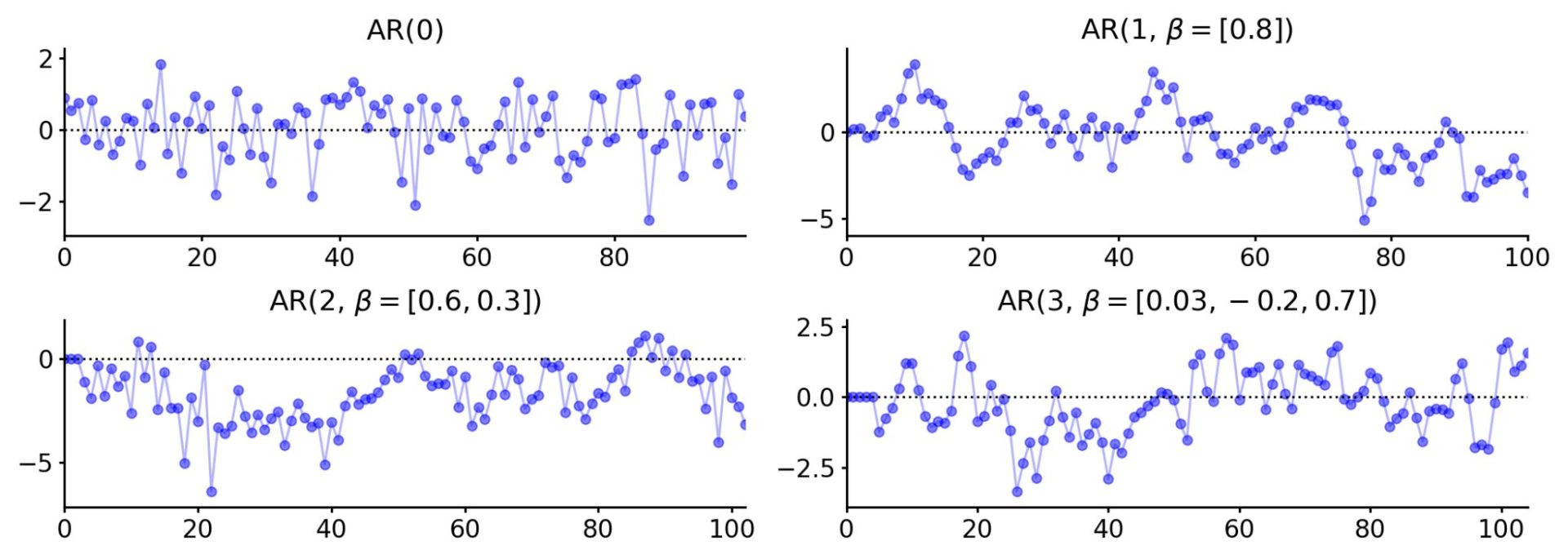
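
The competition between the Generator and the Discriminator is usually written as a single value function that the Discriminator tries to maximize and the Generator tries to minimize. The sketch below assumes the standard formulation, V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which the text above does not spell out. The Discriminator outputs are made-up numbers, not results from a trained model.

```python
import math


def gan_value(d_real, d_fake):
    """Value function V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: Discriminator outputs (probability of "real") on training samples.
    d_fake: Discriminator outputs on Generator samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# Made-up Discriminator outputs, for illustration only.
confident = gan_value(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
fooled = gan_value(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])

print(f"Discriminator winning: V = {confident:.3f}")
print(f"Discriminator fooled:  V = {fooled:.3f}")
```

When the Discriminator can no longer tell real from generated samples and outputs 0.5 everywhere, the value drops to -log 4, which is the point the Generator is pushing towards.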

- Autoregressive Models

Autoregressive networks are probabilistic models that predict future values from past values without using any latent random variable. They decompose the joint probability with the chain rule of probability into a product of conditional probabilities.

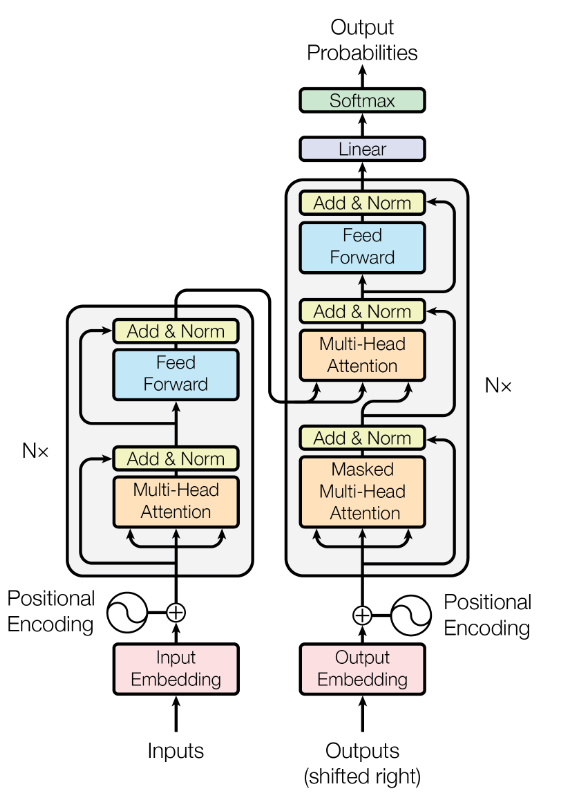
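
The chain-rule decomposition can be illustrated with a toy model over binary sequences. The conditional rule in `p_next` is a hypothetical stand-in (Laplace smoothing over the symbols seen so far); an autoregressive network would compute this conditional with learned parameters instead.

```python
from itertools import product


def p_next(symbol, prefix):
    """Toy conditional p(x_i | x_1..x_{i-1}) for binary symbols.

    Hypothetical rule: the more ones seen so far, the more likely
    the next symbol is a one.
    """
    p_one = (sum(prefix) + 1) / (len(prefix) + 2)
    return p_one if symbol == 1 else 1.0 - p_one


def sequence_probability(seq):
    """Chain rule: p(x_1..x_n) = product of p(x_i | x_1..x_{i-1})."""
    prob = 1.0
    for i, symbol in enumerate(seq):
        prob *= p_next(symbol, seq[:i])
    return prob


print(sequence_probability((1, 1, 1)))

# The probabilities of all length-3 sequences should sum to 1.
total = sum(sequence_probability(s) for s in product((0, 1), repeat=3))
print(total)
```

For the sequence `(1, 1, 1)` the product is 1/2 x 2/3 x 3/4, so the first printed value should be close to 0.25.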

- Large Language Models (LLMs)

LLMs are mostly based on the Transformer architecture, hence the name Generative Pre-trained Transformer (GPT). The original Transformer consists of an encoder-decoder pair: the encoder maps the input symbols to continuous representations, and the decoder generates the output sequence from them. GPT-style models use only the decoder stack.

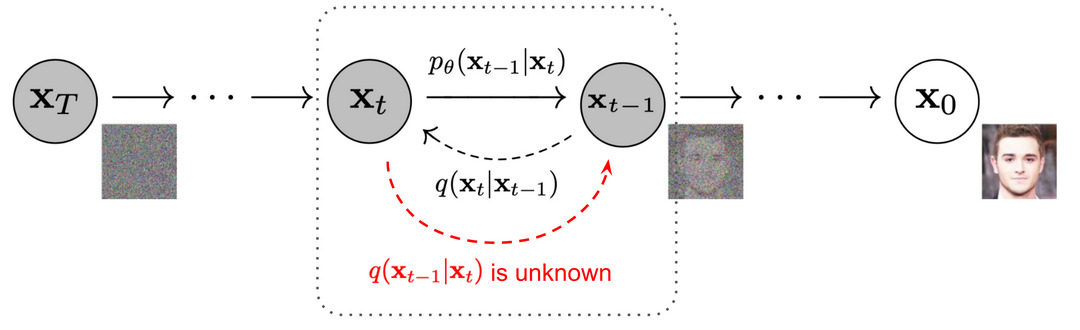

- Diffusion Models

The Diffusion approach resembles an autoregressive model in that it works through a sequence of steps, each conditioned on the previous one. It gradually “drowns” the input data in Gaussian noise, which leads to its “destruction”. After that, the denoising process begins, which reverses the steps and reconstructs the initial input data from the noise.
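
The noising half of this process can be sketched in a few lines. The example assumes the closed-form forward step and the linear noise schedule popularized by DDPM-style models (the default values of `num_steps`, `beta_start`, and `beta_end` are assumptions, not something this article specifies). The reverse, denoising half needs a trained network and is not shown.

```python
import math
import random


def alpha_bar(t, num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of signal left after t steps: product of (1 - beta_s)."""
    result = 1.0
    for s in range(t):
        # Linear schedule: the noise level beta grows from beta_start to beta_end.
        beta = beta_start + (beta_end - beta_start) * s / (num_steps - 1)
        result *= 1.0 - beta
    return result


def add_noise(x0, t, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bar(t)
    return [math.sqrt(a) * v + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
            for v in x0]


rng = random.Random(0)
x0 = [1.0, -1.0, 0.5, 0.0]
for t in (0, 100, 500, 1000):
    noisy = add_noise(x0, t, rng)
    print(t, round(alpha_bar(t), 4), [round(v, 2) for v in noisy])
```

At `t = 0` the data is untouched; by the final step almost none of the original signal remains and the sample is close to pure Gaussian noise.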

Other GenAI Models

Alternative approaches to Generative AI include:

- Convolutional Generative Networks

Transposing the convolution operator makes it possible to synthesize realistic pictures. A recognition network passes information from the picture up to a summarization layer and discards detail along the way, mainly through pooling. A generator network runs in the opposite direction, so it has to add rich and subtle details to the image as it is built up.

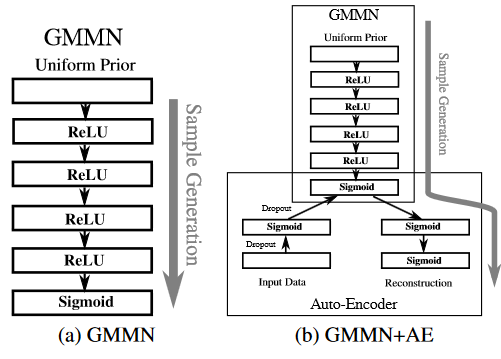
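
A one-dimensional sketch shows how a transposed convolution turns a short, coarse signal into a longer, more detailed one. The kernel weights and the stride of 2 are hypothetical values chosen for illustration; a real generator learns its kernels and works on 2-D feature maps.

```python
def conv_transpose_1d(signal, kernel, stride=2):
    """Transposed 1-D convolution: each input value scatters a scaled kernel copy."""
    out_len = (len(signal) - 1) * stride + len(kernel)
    out = [0.0] * out_len
    for i, value in enumerate(signal):
        for j, weight in enumerate(kernel):
            out[i * stride + j] += value * weight
    return out


coarse = [1.0, 2.0, 3.0]
kernel = [1.0, 0.5]  # hypothetical weights; a real network learns these
fine = conv_transpose_1d(coarse, kernel, stride=2)
print(len(coarse), "->", len(fine))
print(fine)  # [1.0, 0.5, 2.0, 1.0, 3.0, 1.5]
```

Three input values become six output values, which is the upsampling behaviour a generator relies on when it grows a small feature map into a full image.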

- Generative Moment Matching Networks (GMMN)

A GMMN is trained by minimizing Maximum Mean Discrepancy (MMD), a measure of the difference between the feature means of generated and real samples, using backpropagation. It can then be combined with an auto-encoder network whose decoder synthesizes samples from the codes the GMMN produces. The model is somewhat similar to the GAN architecture, but minimizing the MMD criterion avoids the hard minimax objective function.

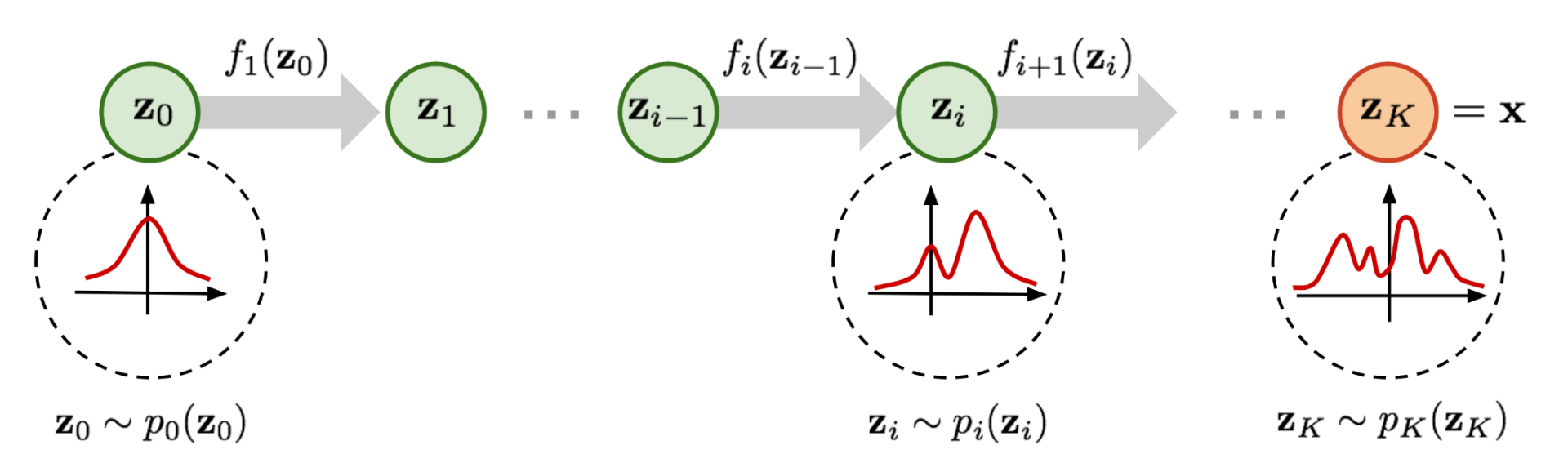
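
The MMD criterion itself is simple to compute on two sets of samples. The sketch below assumes a Gaussian kernel with a fixed bandwidth and the biased estimator of squared MMD, applied to made-up scalar samples; the function names are illustrative and it only evaluates the criterion, it does not train a network.

```python
import math


def gaussian_kernel(a, b, bandwidth=1.0):
    """Gaussian (RBF) kernel between two scalars."""
    return math.exp(-((a - b) ** 2) / (2.0 * bandwidth ** 2))


def mean_kernel(xs, ys):
    """Average kernel value over all pairs (x, y)."""
    total = sum(gaussian_kernel(x, y) for x in xs for y in ys)
    return total / (len(xs) * len(ys))


def mmd_squared(real, generated):
    """Biased estimate of squared MMD between two sample sets."""
    return (mean_kernel(real, real)
            + mean_kernel(generated, generated)
            - 2.0 * mean_kernel(real, generated))


# Made-up samples, for illustration only.
real = [0.0, 0.1, -0.2, 0.3]
close = [0.1, 0.0, -0.1, 0.2]
far = [3.0, 3.1, 2.8, 3.3]

print("close:", mmd_squared(real, close))
print("far:  ", mmd_squared(real, far))
```

Samples that resemble the real data give a value near zero, while samples from a shifted distribution give a clearly larger one, so the quantity can serve directly as a loss to minimize.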

- Flow-Based Generative Models (FBGM)

Flow-based solutions are non-autoregressive models built from invertible transformations. They are quite computationally efficient, but their density modelling performance often lags behind that of autoregressive models. Proposed remedies include variational flow-based dequantization, self-attention in the conditioning networks of coupling layers, and mixture-of-logistics cumulative distribution functions (CDFs) for modelling complex data dependencies.
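
The invertibility that flow-based models depend on can be seen in a single affine coupling layer. This is a minimal sketch, assuming an even-length input and a hypothetical conditioner `scale_and_shift`; in a real model the conditioner is a neural network (possibly with self-attention), and it does not itself need to be invertible.

```python
import math


def scale_and_shift(x1):
    """Hypothetical conditioner: computes a log-scale and shift from x1."""
    s = [math.tanh(v) for v in x1]
    t = [0.5 * v for v in x1]
    return s, t


def coupling_forward(x):
    """y1 = x1, y2 = x2 * exp(s(x1)) + t(x1). Returns (y, log|det Jacobian|)."""
    half = len(x) // 2
    x1, x2 = x[:half], x[half:]
    s, t = scale_and_shift(x1)
    y2 = [b * math.exp(si) + ti for b, si, ti in zip(x2, s, t)]
    return x1 + y2, sum(s)


def coupling_inverse(y):
    """Exact inverse: x2 = (y2 - t(y1)) * exp(-s(y1))."""
    half = len(y) // 2
    y1, y2 = y[:half], y[half:]
    s, t = scale_and_shift(y1)
    x2 = [(b - ti) * math.exp(-si) for b, si, ti in zip(y2, s, t)]
    return y1 + x2


x = [0.3, -1.2, 2.0, 0.7]
y, log_det = coupling_forward(x)
x_back = coupling_inverse(y)
print("y:", [round(v, 4) for v in y])
print("log|det J|:", round(log_det, 4))
print("recovered x:", [round(v, 4) for v in x_back])
```

Because the first half passes through unchanged, the inverse can recompute the same scale and shift, and the log-determinant is just the sum of the log-scales. This is what keeps exact density evaluation cheap.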

Antispoofing
