Human Factor in Deepfake Detection

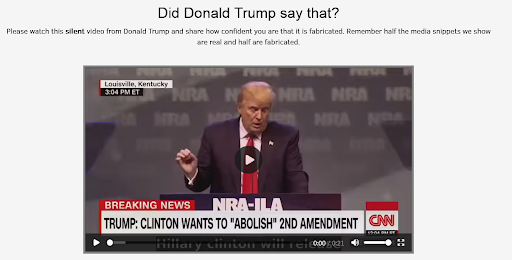

Deepfake detection is the ability to recognize falsified media and distinguish it from bona fide visual or auditory data. The roots of deepfake technology are often traced to the late 1990s, but deepfakes became a widespread phenomenon in 2017.

As a result, researchers have voiced concerns about the advent and evolution of facial deepfakes (as well as other deepfake types). Professor Don Fallis points to the epistemic threat that such technology represents: viewers can no longer trust their own senses and cognitive abilities to tell fiction from truth.

Other possible threats include the liar’s dividend and reality apathy. The former means that a corrupt person can dismiss genuine evidence as a deepfake to defend themselves against public outcry. The latter denotes a cognitive state in which people doubt that an actual event really happened.

There have been numerous experiments comparing how effectively humans and machines detect falsified media, including digital face manipulations. Nearly all of them showed that computer-based detection is far superior.

However, an MIT study showed that people can also successfully detect deepfakes if they are well informed about the context of a video. In its Experiment 1, 82% of participants outperformed machine detection methods.

Detecting Deepfakes: Methods & Effectiveness

As studies show, humans detect deception at a rate quite close to chance, that is, little better than blind luck. This applies to various types of manipulation, including text, audio, photos, and videos.

There are a number of manual deepfake detection techniques:

Body & face analysis

As noted by the Facial Liveness Wiki, there are four main criteria by which an observer has a chance to expose a deepfake:

- Segmentation. Low quality in a photo or video can raise doubts: visual artifacts, poor illumination, pixelation, and noise can all evoke suspicion (especially with cheapfakes).

- Face blending. Blending several faces into one is a common practice among fraudsters. By acquiring "extra" facial features, a perpetrator can be identified as someone else. However, this technique may leave errors visible to the naked eye.

- Fake faces. A fake face can look extremely authentic and lifelike. However, this level of trickery requires resources that are unavailable to most fraudsters, so most amateurs produce low-quality forgeries.

- Low synchronization. Mismatches between uttered words and lip movements often serve as a cue that the video is not real.

Misalignment and blurring also give away deepfakes and other falsified media. These errors are especially visible where one body part transitions into another: head to neck, shoulders to arms, and so forth. Many video deepfakes share this trait. Observers may also notice subtly shifting contours of the head, face, or body.
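The blur cue in transition areas can also be roughly quantified. The sketch below is an illustration under stated assumptions and is not a method taken from the cited sources. It uses the variance of a discrete Laplacian, a common sharpness measure, to compare a crop of a transition area (for example, the neck) with a reference crop from the same frame (for example, the cheek). The crops are assumed to be extracted already as grayscale 2-D lists, and the function names are hypothetical.

```python
# Sketch, not a method from the cited sources: compare the sharpness of a
# "transition" crop (e.g. neck) with a reference crop (e.g. cheek) taken from
# the same frame. Crops are assumed to be grayscale 2-D lists of 0-255 values.
# Function names are hypothetical.


def laplacian_variance(region: list[list[float]]) -> float:
    """Variance of the 4-neighbour Laplacian over the region's interior pixels.

    Few sharp edges give a low variance, so lower values mean a blurrier crop.
    """
    h, w = len(region), len(region[0])
    if h < 3 or w < 3:
        raise ValueError("region must be at least 3x3 pixels")
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (region[y - 1][x] + region[y + 1][x]
                   + region[y][x - 1] + region[y][x + 1]
                   - 4 * region[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def sharpness_ratio(transition: list[list[float]],
                    reference: list[list[float]]) -> float:
    """Sharpness of the transition crop relative to the reference crop.

    A ratio well below 1 means the transition area is much blurrier than the
    reference area; any cut-off for "well below" must be calibrated separately.
    """
    ref = laplacian_variance(reference)
    if ref == 0:
        raise ValueError("reference region has no texture to compare against")
    return laplacian_variance(transition) / ref
```

A low ratio only flags an area for closer manual inspection. Natural depth of field or motion blur can produce the same effect, so no fixed threshold is assumed here.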

Another set of clues has been proposed to help viewers identify a synthetic video:

- Facial transformations. Unnatural grimaces, "stuttered" transformations between facial expressions, strange positioning of the nose, eyebrows, philtrum, and other features can indicate a fake.

- Skin texture. In a deepfake, skin may appear overly smooth or wrinkly.

- Shadows. Often, deepfakes can’t realistically imitate light physics, so the face may lack natural shadows.

- Reflections. If a person wears glasses or sunglasses, the lenses may lack reflections or show unnaturally bright glare.

- Hair. Absence of hair or its unnatural, "plastic" texture can also reveal a fake.

- Blinking. Unusually rare or overly frequent blinking is a strong indicator.

- Lips. Forgers often fail to make the shape, size, or color of the lips fit organically with the rest of the face.
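Of the cues above, blinking is the easiest to turn into a number. The sketch below uses the eye aspect ratio (EAR) introduced by Soukupová and Čech, a standard landmark-based measure that falls towards zero when the eye closes. It assumes that per-frame eye landmarks come from some face-landmark detector, which is not shown. The default threshold and minimum blink length are illustrative assumptions and do not come from this article.

```python
import math

# Assumption: per-frame eye landmarks come from a face-landmark detector (not
# shown). threshold=0.2 and min_frames=2 are illustrative defaults only.
Point = tuple[float, float]


def eye_aspect_ratio(eye: list[Point]) -> float:
    """EAR from six landmarks p1..p6; p1 and p4 are the eye corners.

    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|); it drops towards 0
    when the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    return (math.dist(p2, p6) + math.dist(p3, p5)) / (2.0 * math.dist(p1, p4))


def count_blinks(ear_series: list[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR < threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # eye still closed on the last frame
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: list[float], fps: float,
                      threshold: float = 0.2, min_frames: int = 2) -> float:
    """Blink rate over the clip; `fps` is the video's frame rate."""
    if not ear_series or fps <= 0:
        raise ValueError("need a non-empty series and a positive frame rate")
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, threshold, min_frames) / minutes
```

The article gives no numeric range for "strangely rare" or "overly intensive" blinking, so the resulting rate would still have to be judged against what is plausible for the person and the situation.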

Other experts recommend watching for awkward body posture that results in unnatural head or body positioning, one of the easiest "symptoms" to spot in a deepfake. Other indicators they list include unnatural body movement and coloring, as well as visual and audio inconsistencies.

Video quality analysis

As mentioned previously, the segmentation criterion can reveal a forgery. Deepfake tools often leave visual artifacts and noise, which indicate that the video or image was doctored. Researchers recommend paying attention to distortion or shimmer on the speaker's face, body, or clothes, or on objects that appear in the video.
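Shimmer shows up as sudden frame-to-frame changes in a region that should be stable. The following is a minimal sketch under assumptions, not a technique from the cited research. It presumes that the frames have already been decoded, converted to grayscale and cropped to the same region as 2-D lists. It then flags frames whose change from the previous frame is several times larger than the median change. The cut-off factor and the function names are hypothetical.

```python
from statistics import median

# Assumption: frames are already decoded, converted to grayscale and cropped to
# the same region (e.g. the speaker's collar) as 2-D lists of pixel values.
Frame = list[list[float]]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel change between two equally sized frames."""
    diffs = [abs(pa - pb) for row_a, row_b in zip(a, b)
             for pa, pb in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def flicker_frames(frames: list[Frame], factor: float = 3.0) -> list[int]:
    """Indices of frames whose change from the previous frame exceeds
    `factor` times the median frame-to-frame change (a hypothetical heuristic).

    A single glitched frame is flagged together with the frame after it,
    because both the jump into and the jump out of the glitch are large.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    changes = [mean_abs_diff(frames[i - 1], frames[i])
               for i in range(1, len(frames))]
    cutoff = factor * max(median(changes), 1e-9)  # avoid a zero cut-off
    # changes[j] is the jump from frame j to frame j + 1
    return [j + 1 for j, c in enumerate(changes) if c > cutoff]
```

Fast genuine motion also triggers this check. The flagged indices are best treated as frames to step through by hand, as in the frame-by-frame approach described below.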

Technical analysis

Even though this method involves technical tools, an ordinary user without access to state-of-the-art technologies such as AI or neural networks can apply it to an extent.

There are several ways and means:

- Slowing down. With a simple application such as Shotcut, a video can be slowed down or broken into frames. "Suspicious areas" such as the lips can then be zoomed in on and studied frame by frame for mismatches.

- Cryptography. Genuine videos can be protected with cryptographic algorithms, blockchain-based digital fingerprints, and similar means. The absence of such metadata can point to possible tampering.

- Image search. A fake photo or video can also be exposed with a simple reverse image search. Taking a screenshot of a video and running it through services such as Google Images, Picsearch, or TinEye can help find the source media.
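The cryptography and image-search items rely on two different kinds of fingerprint. The sketch below contrasts them and is not a description of how any named service works. A cryptographic hash such as SHA-256 changes completely if a single byte changes, which makes it suitable for checking a file against a digest published by its source. Reverse image search needs the opposite property: similar images should get similar fingerprints. The "average hash" below is one simple perceptual hash with that property. It assumes that the image has already been downscaled to a small grayscale thumbnail, and all function names are hypothetical.

```python
import hashlib

# Exact fingerprint: any change to the bytes (re-encoding, a single edited pixel)
# gives a different digest, which is what makes it useful against tampering.
def sha256_fingerprint(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def matches_published(data: bytes, published_hex: str) -> bool:
    """Compare a file's digest with one published by the original source."""
    return sha256_fingerprint(data) == published_hex.strip().lower()


# Perceptual "average hash" (aHash): tolerant of small global changes such as
# brightness shifts. Assumes the image was already downscaled to a small
# grayscale thumbnail, e.g. 8x8, given as a 2-D list.
def average_hash(thumb: list[list[float]]) -> int:
    pixels = [p for row in thumb for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)  # one bit per pixel
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; small distances suggest the same source image."""
    return bin(a ^ b).count("1")
```

For example, brightening every pixel of a thumbnail leaves its average hash unchanged (Hamming distance 0), while the SHA-256 digests of the two versions differ. This is why the first kind of fingerprint suits tamper checks and the second suits finding a likely source.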

Moreover, special tools are also being developed to make deepfake detection simple and accessible to regular users.

Psychological Aspects of Deepfake Detection

Human psychology can either help or hinder deepfake detection. As one study reports, the liar’s dividend can significantly undermine the process: once informed that a deepfake exists, viewers can become overly alert and suspicious even of bona fide media.

The study also names overconfidence as a possible source of error: a person can overestimate their ability to detect deepfakes. This leads to rejecting genuine media and falling for manipulations.

At the same time, the human brain has developed its own helpful mechanisms over the course of evolution. For instance, seeing a human face, even a random one, is known to trigger signals in the amygdala that make it easier to identify another member of our species.

Contextual analysis is an important factor in successful deepfake detection. As experiments show, it can outperform even AI-powered solutions (see below).

Experiments

A number of experiments have been held to assess human capabilities in deepfake detection.

An experiment hosted by UC Berkeley challenged participants to expose deepfake images. The dataset mixed real faces with artificial ones created by a generative adversarial network (StyleGAN2). The two-stage experiment showed that people identify fakes with only about 50% accuracy, which is no better than chance.

A similar study used a dataset of images doctored with Adobe Photoshop. Participants did slightly better, with 53.5% accuracy, while the machine approach reached 99% accuracy.
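Whether a rate such as 53.5% counts as "close to chance" depends on how many judgments it is based on. One standard way to check this is an exact one-sided binomial test. It computes the probability that someone guessing at random would score at least as well. The sketch below is not taken from the cited studies, and the trial counts in the usage note are hypothetical.

```python
from fractions import Fraction
from math import comb


def p_value_above_chance(correct: int, trials: int, chance: float = 0.5) -> float:
    """One-sided exact binomial test.

    Returns P(X >= correct) for X ~ Binomial(trials, chance), i.e. the probability
    that a pure guesser scores at least this well. Exact rational arithmetic
    avoids float overflow in comb() for large trial counts.
    """
    if not 0 <= correct <= trials:
        raise ValueError("need 0 <= correct <= trials")
    if not 0 < chance < 1:
        raise ValueError("chance must be strictly between 0 and 1")
    p = Fraction(chance)
    q = 1 - p
    tail = sum(comb(trials, k) * p**k * q**(trials - k)
               for k in range(correct, trials + 1))
    return float(tail)
```

Both hypothetical counts below give the same 53.5% rate. With 107 correct out of 200 judgments, `p_value_above_chance(107, 200)` exceeds 0.05, so the result is consistent with guessing. With 1,070 out of 2,000, the p-value falls below 0.01. Even a statistically significant margin over chance can be too small to be useful in practice.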

However, an experiment held at MIT produced intriguing results. It found that contextual awareness and emotional state can dramatically improve deepfake detection skills. For instance, negative emotions decrease a viewer's "gullibility".

The study also says that face processing is somewhat easier for humans thanks to specialized brain regions, noting that "the human visual system is equipped with mechanisms dedicated for face perception". In turn, this helps with detecting synthetic faces.

Of 882 participants, 82% beat the computer methods when analyzing the video pairs. On average, participants took 42 seconds to respond to the fake and real videos. A "wisdom of the crowd" aggregate of 1,879 participants also showed decent results, with 86% mean accuracy.

Interestingly, the study also showed that 68% of non-recruited participants outperformed the leading detection model after watching genuine and fake videos featuring political figures. This supports the notion that contextual awareness plays a major role in exposing fabricated media.

The study concluded that ordinary viewers can achieve impressive results, especially when they are given an "ability to go beyond visual perception of faces". This suggests that the human factor cannot be entirely dismissed from media forensics.

FAQ

Is human performance in face liveness detection better than machine performance?

Current research indicates that computer performance in liveness detection is far superior to human performance.

Human vs. Computer performance in facial liveness recognition has been a controversial topic in the antispoofing area.

A number of tests have demonstrated that computers dramatically exceed human capacity. For example, ID R&D's experiment showed rather disappointing results for humans: their error rate at distinguishing bona fide images from fake photos, measured as BPCER (bona fide presentation classification error rate), was 18.28%.
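BPCER and its counterpart APCER (attack presentation classification error rate) are defined in ISO/IEC 30107-3. BPCER is the share of genuine presentations wrongly rejected as attacks. APCER is the share of attack presentations wrongly accepted as genuine. The sketch below computes both from labeled decisions. As a simplification, it pools all attacks into one APCER, whereas the standard reports APCER per attack instrument species. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    is_attack: bool      # ground truth: True for a fake/spoof presentation
    judged_attack: bool  # decision made by the human observer or the system


def apcer(trials: list[Trial]) -> float:
    """Share of attack presentations wrongly accepted as bona fide."""
    attacks = [t for t in trials if t.is_attack]
    if not attacks:
        raise ValueError("no attack presentations")
    return sum(not t.judged_attack for t in attacks) / len(attacks)


def bpcer(trials: list[Trial]) -> float:
    """Share of bona fide presentations wrongly rejected as attacks."""
    bona_fide = [t for t in trials if not t.is_attack]
    if not bona_fide:
        raise ValueError("no bona fide presentations")
    return sum(t.judged_attack for t in bona_fide) / len(bona_fide)
```

Consider a hypothetical observer who flags 2 of 10 genuine images as fake and accepts 3 of 10 fakes as genuine. That observer would have a BPCER of 0.2 and an APCER of 0.3. By the same definition, a BPCER of 18.28% means that roughly 18 of every 100 genuine images were wrongly flagged as fake.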

Similar tests held at UC Berkeley and other institutions confirmed that human detection accuracy is rather low and close to chance. At the same time, it has been proposed that humans can detect deepfakes fairly easily if they know the context of a given video.

Are there any tests which compared Human vs. Machine performance in face liveness detection?

A series of tests were held at various institutions comparing Human and Computer performance in face liveness detection.

One of the first such tests used images synthesized by a neural network, StyleGAN2. Conducted jointly by several research institutions, it set out to measure how well people can tell a synthetic face from a real one. The volunteers performed very poorly, with an accuracy of only about 50%.

Several similar tests produced almost identical results. For example, an experiment with fake images produced using Adobe Photoshop showed that human performance is close to guessing, at 53.5% accuracy. Despite these results, some researchers believe that completely excluding people from facial and other types of antispoofing would be a mistake.

Are computers successful in performing face liveness detection?

Empirical data shows that computers are highly accurate and drastically outperform humans at detecting facial liveness.

The human factor in antispoofing protection has been widely discussed. A number of tests were held to measure how well humans pinpoint artificial faces, but the results were mostly unsatisfactory: measured by the APCER and BPCER metrics, human performance is poor and close to blind luck.

Computers served as "rivals" in these tests. Experiments such as the one held at ID R&D showed that machines can spot a fake face in almost 100% of cases. Another test found that computers are highly reliable at detecting morphed images, with a consistent 99% detection rate.

What are the main aspects of successful spoof image detection by a human?

There are four main aspects that help a human in detecting spoofed visual data.

Experts mention four chief aspects that help reveal a spoofed image with the naked eye. Segmentation refers to poor image quality, such as noise and pixelation. Face blending is hard to detect, but it sometimes leaves visible artifacts indicating that the image was doctored.

Synthetic faces can look extremely real, especially in deepfake videos. But such finesse is hard to achieve for most fraudsters, who are limited to lower-quality images. Poor synchronization, such as mistimed lip movements, also gives a fake away. Some researchers also note that humans can excel at antispoofing when they are given contextual details.

Is it possible to detect deepfakes manually without special tools and programs?

Typically, manual deepfake detection yields poor results.

Manual antispoofing and deepfake detection have so far failed to show significant results. On average, test participants achieve 50% accuracy or lower, which is close to blind luck. Furthermore, human deepfake detection can be hindered by factors such as the liar’s dividend and overconfidence. The former occurs when a person automatically sees everything as "fake", while the latter means that a person overestimates their ability to spot false media.

At the same time, human observers have been known to beat machines at detecting liveness and deepfakes when they have contextual awareness. This is one of the reasons why "political deepfakes" often fail.

Can people successfully detect deepfakes?

Human ability to detect deepfakes has generally been poor, with a few exceptions.

As a number of experiments and real-life incidents demonstrate, people struggle to recognize faces and voices accurately when exposed to fabricated media. This is because modern tools such as StyleGAN2, text-to-image models, and CyberVoice can create highly realistic copies of real voices and faces.

However, people detect deepfakes more successfully when contextual information is provided: if an observer knows the person they see in a video, they have a higher chance of spotting the fake. Cheapfakes (video fakes that visibly lack quality) are another type that people detect more easily.

What are the main criteria for exposing deepfakes?

There are various criteria to follow when identifying deepfakes.

Antispoofing protection guidelines recommend observing the following characteristics:

- Media quality. Low resolution, poor illumination, pixelation, etc.

- Fake face. Unnatural positioning of facial features/organs, strange skin texture, misshapen lips, lack of shadows, absence of hair, uneven complexion, etc.

- Poor synchronization. Mismatches in sound and lip movement, unnatural speech tempo, etc.

Additionally, there are online tools that can verify media authenticity. For example, DeepfakeProof is a free browser plugin that scans webpages and detects fake media. Similar antispoofing tools include Deepfake-o-Meter and Sensity.

How to detect deepfakes manually without software?

Experts indicate a few methods of manual deepfake detection.

Deepfakes are fabricated media and therefore sometimes leave clues visible to a human. These include misshapen limbs and head and body contours distorted by warping. Other prominent deepfake indicators include unnaturally smooth skin, lack of facial hair, motionless pupils, and lip movements that are out of sync with the voice.

Antispoofing experts also point to blurriness in ‘transition areas’, that is, the regions where one body part meets another, such as the neck, shoulders, elbows, and legs. Distortion and noise in these areas can indicate that the video has been digitally altered.

While videos and photos often contain visual cues that help with manual detection, artificially generated audio poses new challenges. Manual detection methods for fake audio are covered in a separate article.

References

- Professor Don Fallis

- The Social Impact of Deepfakes

- Random Face Generator

- The Liar’s Dividend: The Impact of Deepfakes and Fake News on Politician Support and Trust in Media

- Top AI researchers race to detect ‘deepfake’ videos: ‘We are outgunned’

- Deepfake Detection by Human Crowds, Machines, and Machine-informed Crowds

- Is the political aide viral sex video confession real or a Deepfake?

- Accuracy of Deception Judgments

- Beware the Cheapfakes

- A cheapfake example

- Detect DeepFakes: How to counteract misinformation created by AI

- Philtrum (Wikipedia)

- Norton

- What is a deepfake? Everything you need to know about the AI-powered fake media

- The rise of the deepfake and the threat to democracy

- Detect Fakes welcomes users to detect deepfakes

- DeepTrace Technologies SRL

- Fooled twice: People cannot detect deepfakes but think they can

- Saccade-related neural communication in the human medial temporal lobe is modulated by the social relevance of stimuli

- Synthetic Faces: how perceptually convincing are they?

- Detecting Photoshopped Faces by Scripting Photoshop

- Facebook AI Launches The Large Deepfake Detection Challenge
