Face Recognition: Effective Methods & Techniques

Face recognition has a wide range of applications, from shopping and entertainment to law enforcement, banking, and surveillance. When used for biometric recognition, it must maintain a high level of accuracy, which in turn requires integrating a number of highly reliable Face Recognition Methods (FRMs). Facial anti-spoofing, with its attack types, countermeasures, and challenges, naturally accompanies this area.

The reliability and accuracy of FRMs are especially vital now that facial recognition has become an extremely popular feature of mobile devices. According to one study, up to 800 million mobile devices will use face unlock by 2024. In addition, rapidly advancing technology has led to wider use of face recognition in virtual reality, surveillance, security, and similar systems. As a result, the need for accurate facial anti-spoofing certification arose in 2017.

Definition

Face recognition methods, often paired with liveness detection, are a group of techniques for detecting a face and extracting its features for further analysis. The liveness detection part can be either passive or active.

In essence, face recognition is focused on four primary tasks:

- Detection. A target’s face is detected.

- Analysis. The system captures the image of the face for further analysis.

- Image conversion. Facial features are turned into a faceprint — a set of digital data that "describes" the facial characteristics in the form of digital code, vector data, etc.

- Identification. The system matches the captured face with the faces stored in its database.
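The four tasks can be strung together as a small pipeline. The sketch below is a minimal illustration under stated assumptions, not a production design: `FacePipeline`, `whole_image_detector`, and `block_mean_encoder` are hypothetical names, the placeholder detector and encoder stand in for a trained face detector and a learned faceprint encoder, and identification is a nearest-match search with an assumed distance threshold of 0.4.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (x, y, width, height)


@dataclass
class FacePipeline:
    detect: Callable[[np.ndarray], Optional[Box]]  # stage 1: locate a face
    encode: Callable[[np.ndarray], np.ndarray]     # stage 3: face crop -> faceprint
    database: Dict[str, np.ndarray]                # enrolled faceprints by identity
    threshold: float = 0.4                         # max distance for a match (assumed)

    def run(self, image: np.ndarray) -> Optional[str]:
        box = self.detect(image)                   # 1. Detection
        if box is None:
            return None
        x, y, w, h = box
        face = image[y:y + h, x:x + w]             # 2. Analysis: capture the face region
        faceprint = self.encode(face)              # 3. Conversion into a faceprint
        # 4. Identification: closest enrolled faceprint, accepted only within the threshold
        best_name, best_dist = None, float("inf")
        for name, ref in self.database.items():
            dist = float(np.linalg.norm(faceprint - ref))
            if dist < best_dist:
                best_name, best_dist = name, dist
        return best_name if best_dist <= self.threshold else None


def whole_image_detector(image: np.ndarray) -> Optional[Box]:
    """Placeholder detector (hypothetical): treats the whole image as the face."""
    return (0, 0, image.shape[1], image.shape[0])


def block_mean_encoder(face: np.ndarray) -> np.ndarray:
    """Placeholder faceprint (hypothetical): 4x4 grid of block means, L2-normalized."""
    h, w = face.shape
    bh, bw = h // 4, w // 4
    blocks = face[: 4 * bh, : 4 * bw].reshape(4, bh, 4, bw).mean(axis=(1, 3))
    v = blocks.ravel()
    return v / (np.linalg.norm(v) + 1e-12)
```

Keeping the detector and encoder pluggable mirrors the idea that each stage can be replaced independently, for example by a neural detector in stage 1 and a learned embedding in stage 3.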

The four-task process above is further strengthened with tools such as global and local classifiers, principal component analysis, the discrete cosine transform, locality preserving projections, and neural networks. The recognition methods themselves are based on the cross-correlation coefficient, subspace projection, or face geometry.
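As an illustration of the cross-correlation coefficient, the sketch below scores how well an aligned probe image matches a stored image. It assumes both are grayscale arrays of the same size that are already aligned; `cross_correlation_coefficient` is a hypothetical helper implementing the standard zero-mean normalized cross-correlation, whose value lies between -1 and 1.

```python
import numpy as np


def cross_correlation_coefficient(a, b):
    """Zero-mean normalized cross-correlation of two aligned, equally sized images (range -1..1)."""
    if a.shape != b.shape:
        raise ValueError("images must have the same shape")
    a = a.astype(float).ravel() - a.mean()  # subtract the mean intensity
    b = b.astype(float).ravel() - b.mean()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0
```

Because both images are mean-centered and normalized, the score is unaffected by uniform brightness and contrast changes, which makes it somewhat tolerant of global lighting differences.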

Methods Concerning Local and Global Features

Local and global features work in tandem to enable quick and accurate face recognition, even when an individual’s face is "distorted" by lighting, aging, viewing angle, or occluding objects such as glasses.

Below is a more detailed overview of both methods:

Local Features

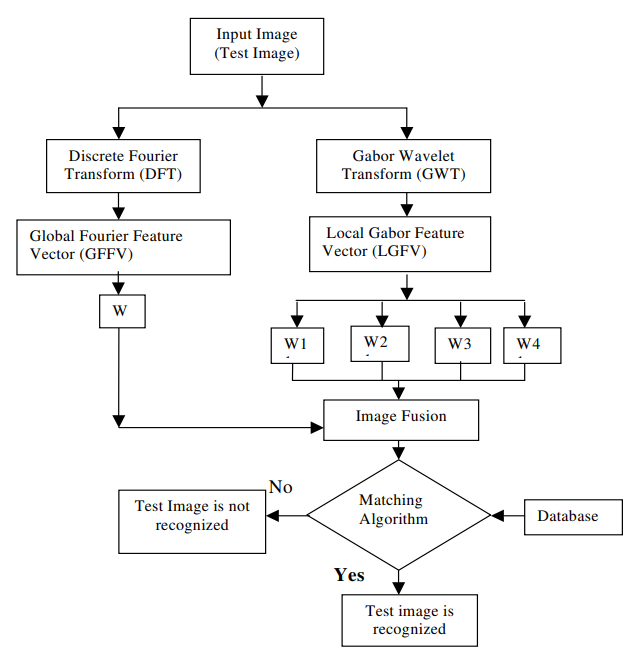

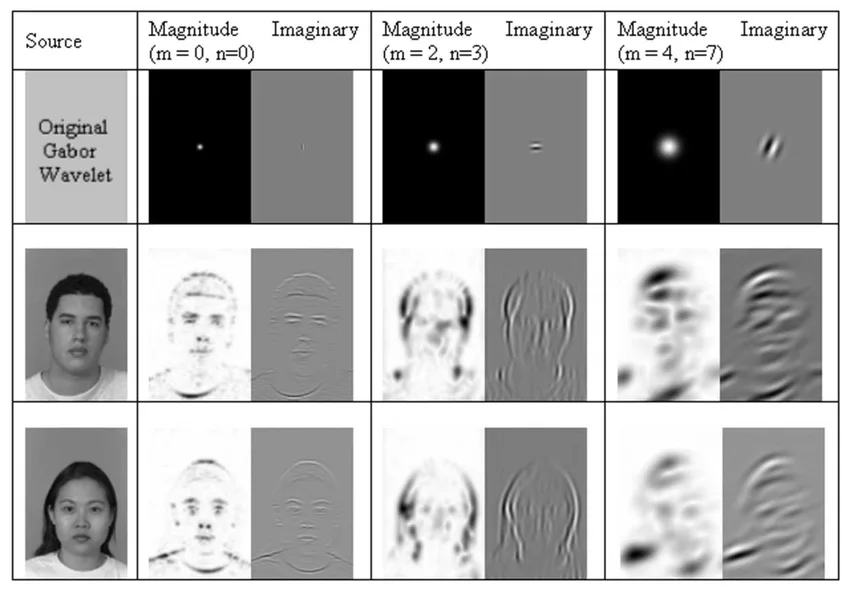

Local feature analysis employs Gabor wavelets and focuses on local information in the high-frequency range. The characteristics it analyzes are grouped by facial area: mouth, eyes, cheeks, nose, and so forth. Gabor feature vectors are grouped spatially so that each group corresponds to a local facial patch; such a group is called a Local Gabor Feature Vector (LGFV). LGFVs supplement the global feature analysis by conveying more detailed information about specific facial regions.
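A rough sketch of how Gabor responses can be grouped into per-patch LGFVs follows. The filter-bank parameters (two wavelengths, four orientations, sigma = 0.56 × wavelength, aspect ratio 0.5) and the patch coordinates are assumptions chosen for illustration; practical systems often use a larger bank. Filtering is done by circular convolution through the FFT to keep the example NumPy-only, and all function names are hypothetical.

```python
import numpy as np


def gabor_kernel(sigma, theta, wavelength, gamma=0.5):
    """Complex Gabor kernel: Gaussian envelope times a complex sinusoid along direction theta."""
    half = int(3 * sigma)
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    xr = x * np.cos(theta) + y * np.sin(theta)
    yr = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
    return envelope * np.exp(1j * 2 * np.pi * xr / wavelength)


def gabor_magnitude(image, kernel):
    """Filter the image with the kernel (circular convolution via FFT) and return |response|."""
    H, W = image.shape
    kh, kw = kernel.shape
    padded = np.zeros((H, W), dtype=complex)
    padded[:kh, :kw] = kernel
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))  # kernel centre -> (0, 0)
    return np.abs(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))


def local_gabor_feature_vectors(image, patches, wavelengths=(4.0, 8.0), orientations=4):
    """Group Gabor magnitudes spatially: one LGFV per named facial patch (y0, y1, x0, x1)."""
    responses = []
    for lam in wavelengths:
        for k in range(orientations):
            kernel = gabor_kernel(sigma=0.56 * lam, theta=np.pi * k / orientations, wavelength=lam)
            responses.append(gabor_magnitude(image.astype(float), kernel))
    stack = np.stack(responses)  # (n_filters, H, W)
    return {name: stack[:, y0:y1, x0:x1].ravel() for name, (y0, y1, x0, x1) in patches.items()}
```

Each LGFV concatenates every filter's response inside one patch, so it describes that region at several scales and orientations.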

Global Features

Global feature analysis treats the human face as a whole, taking into account general data such as the head contour and overall facial shape. It works in the low-frequency range: the low-frequency Fourier components of the face image are concatenated into a single feature vector called the Global Fourier Feature Vector (GFFV).
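The GFFV idea can be sketched in a few lines: take the 2D Fourier transform of the face image, keep only a small block of coefficients around the zero frequency, and concatenate their real and imaginary parts. The block size `k`, the use of both real and imaginary parts, and the function name are assumptions for this example.

```python
import numpy as np


def global_fourier_feature_vector(image, k=8):
    """Concatenate real and imaginary parts of the lowest-frequency Fourier coefficients."""
    spectrum = np.fft.fftshift(np.fft.fft2(image.astype(float)))  # zero frequency moved to the centre
    cy, cx = image.shape[0] // 2, image.shape[1] // 2
    low = spectrum[cy - k:cy + k, cx - k:cx + k]                   # central (2k x 2k) block
    return np.concatenate([low.real.ravel(), low.imag.ravel()])
```

Because only the central block of the shifted spectrum is kept, fine high-frequency detail such as a checkerboard pattern leaves the GFFV unchanged; that kind of detail is what the local Gabor features are meant to capture.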

Linear discriminant

Once the system has extracted both global and local features, Fisher’s linear discriminant is applied separately to a) the Global Fourier features and b) the Local Gabor features. The resulting vectors are then fused with a region-based merging algorithm, which leads to the verification phase that completes the recognition process.
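The sketch below shows Fisher’s linear discriminant applied separately to each feature type, followed by a fusion step. The region-based merging algorithm is not specified here, so the example substitutes a simple weighted sum of global and local cosine similarities; `fit_fisher_lda`, `fused_score`, the ridge term `reg`, and the equal weighting are all assumptions for illustration.

```python
import numpy as np


def fit_fisher_lda(X, y, n_components, reg=1e-3):
    """Fisher LDA: directions maximizing between-class vs within-class scatter."""
    mean = X.mean(axis=0)
    d = X.shape[1]
    Sw = np.zeros((d, d))
    Sb = np.zeros((d, d))
    for c in np.unique(y):
        Xc = X[y == c]
        mc = Xc.mean(axis=0)
        Sw += (Xc - mc).T @ (Xc - mc)              # within-class scatter
        diff = (mc - mean)[:, None]
        Sb += len(Xc) * (diff @ diff.T)            # between-class scatter
    Sw += reg * np.eye(d)                          # small ridge keeps Sw invertible (assumed)
    # Solve Sb w = lambda Sw w via Cholesky whitening so a symmetric solver can be used.
    L_inv = np.linalg.inv(np.linalg.cholesky(Sw))
    evals, V = np.linalg.eigh(L_inv @ Sb @ L_inv.T)
    top = V[:, np.argsort(evals)[::-1][:n_components]]
    return L_inv.T @ top                           # (d, n_components) projection matrix


def cosine(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


def fused_score(probe_g, probe_l, ref_g, ref_l, W_g, W_l, weight=0.5):
    """Weighted-sum fusion (a stand-in for region-based merging) of global and local similarity."""
    s_global = cosine(probe_g @ W_g, ref_g @ W_g)
    s_local = cosine(probe_l @ W_l, ref_l @ W_l)
    return weight * s_global + (1 - weight) * s_local
```

In practice `W_g` would be fitted on GFFVs and `W_l` on LGFVs from labeled training faces, and the fused score would then be compared against a verification threshold.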

Primary Face Recognition Methods

Researchers distinguish the following primary face recognition methods:

Appearance-based Method

Appearance-based techniques are the oldest facial recognition method still in use: their roots can be traced back to 1970, when a system designed by Japanese researcher Takeo Kanade was able to detect facial features and estimate the distance ratios between them.

Modern appearance-based methods do the same, with some additional steps:

- The feature vectors of the test and database images are obtained.

- The system measures the similarity between the test and database feature vectors; the minimum distance criterion is one such measure.

The appearance-based method has evolved over time and now employs Linear Discriminant Analysis, Principal Component Analysis, Support Vector Machines, Kernel Methods, and so on. Currently, this technique is template-based, meaning that the feature vector represents the entire face template and not just separate facial feature regions.
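A minimal sketch of the template-based matching described above: each aligned face image is flattened into a whole-face feature vector, normalized, and compared with every database template under the minimum distance criterion. The helper names and the mean/norm normalization are hypothetical choices for illustration; a real system would usually match in a reduced space such as PCA or LDA coordinates rather than on raw pixels.

```python
import numpy as np


def to_template(image):
    """Whole-face template: the entire aligned image as one zero-mean, unit-norm vector."""
    v = image.astype(float).ravel()
    v = v - v.mean()
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else v


def minimum_distance_match(test_image, gallery):
    """Return (identity, distance) of the database template closest to the test image."""
    probe = to_template(test_image)
    scored = [(name, float(np.linalg.norm(probe - to_template(img)))) for name, img in gallery]
    return min(scored, key=lambda pair: pair[1])
```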

Subspace-based Method

Subspace-based techniques rely on principles such as dimensionality reduction, linear discriminant analysis, and independent component analysis. The method computes a new coordinate space that reduces the number of dimensions of a face image while maximizing the variance along each orthogonal direction of the subspace. In other words, it finds the vectors that best account for the distribution of face images within the entire image space. These vectors span a face space: a subspace of reduced dimension. Building it involves linearizing the images, calculating the mean vector, computing the covariance matrix and its eigenvectors, and other manipulations that produce the end result.

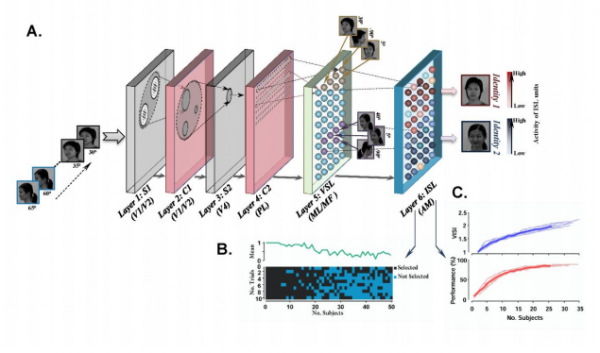
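The face-space construction can be sketched with the classic eigenface steps named above: linearize each image, compute the mean vector, and take the leading eigenvectors of the covariance matrix. To keep the eigenproblem small, the sketch uses the common trick of diagonalizing the N x N matrix A A^T instead of the D x D covariance A^T A (where A holds the mean-centered images). Function names are hypothetical, and images are assumed to be aligned and equally sized.

```python
import numpy as np


def fit_face_space(images, n_components):
    """Eigenfaces via the covariance eigenvectors (n_components must be < number of images)."""
    X = np.stack([img.astype(float).ravel() for img in images])  # linearization: one row per face
    mean = X.mean(axis=0)                                          # mean face vector
    A = X - mean
    # Eigenvectors of the small N x N matrix A A^T / N map to those of the D x D covariance A^T A / N.
    evals, V = np.linalg.eigh(A @ A.T / len(X))
    order = np.argsort(evals)[::-1][:n_components]
    U = A.T @ V[:, order]
    U /= np.linalg.norm(U, axis=0)                                 # unit-length eigenfaces
    return mean, U, evals[order]


def project(image, mean, U):
    """Coordinates of a face in the reduced face space."""
    return U.T @ (image.astype(float).ravel() - mean)
```

Recognition then compares the low-dimensional `project(...)` coordinates instead of raw pixels, for example with the minimum distance criterion.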

Neural network method

The neural network method employs two networks. The first network operates in "auto-association" mode: it approximates the face image with a vector of smaller dimension. The "companion" network acts as a classification net: it receives this vector from the auto-association network and classifies it. The networks have to be selected individually for each specific situation. A major challenge of this method is that a network requires a large number of model images for training. At the same time, it is a preferred technique for passive liveness solutions.
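Below is a toy sketch of the two-network arrangement, assuming a single-hidden-layer tanh auto-associator and a softmax classifier as its companion, both trained by plain gradient descent on synthetic data. Layer sizes, learning rates, the class names, and the synthetic "identities" are assumptions; this is not the architecture from any specific study.

```python
import numpy as np


class AutoAssociator:
    """Network 1: tanh bottleneck that approximates a face vector with a smaller code."""

    def __init__(self, d_in, d_code, rng):
        self.W1 = rng.normal(0.0, 0.1, (d_in, d_code))
        self.b1 = np.zeros(d_code)
        self.W2 = rng.normal(0.0, 0.1, (d_code, d_in))
        self.b2 = np.zeros(d_in)

    def encode(self, X):
        return np.tanh(X @ self.W1 + self.b1)

    def loss(self, X):
        return float(np.mean((self.encode(X) @ self.W2 + self.b2 - X) ** 2))

    def train_step(self, X, lr=0.1):
        H = self.encode(X)
        G = 2.0 * (H @ self.W2 + self.b2 - X) / X.size   # gradient of the mean squared error
        dH = (G @ self.W2.T) * (1.0 - H ** 2)             # backpropagate through tanh
        self.W2 -= lr * (H.T @ G)
        self.b2 -= lr * G.sum(axis=0)
        self.W1 -= lr * (X.T @ dH)
        self.b1 -= lr * dH.sum(axis=0)


class CodeClassifier:
    """Network 2: softmax classifier that receives the auto-associator's codes."""

    def __init__(self, d_code, n_classes):
        self.W = np.zeros((d_code, n_classes))
        self.b = np.zeros(n_classes)

    def probabilities(self, H):
        Z = H @ self.W + self.b
        Z = Z - Z.max(axis=1, keepdims=True)              # numerical stability
        P = np.exp(Z)
        return P / P.sum(axis=1, keepdims=True)

    def train_step(self, H, y, lr=0.5):
        G = self.probabilities(H)
        G[np.arange(len(y)), y] -= 1.0                     # cross-entropy gradient w.r.t. logits
        self.W -= lr * (H.T @ G) / len(y)
        self.b -= lr * G.mean(axis=0)

    def predict(self, H):
        return self.probabilities(H).argmax(axis=1)


# Usage on synthetic data: three "identities", 20 noisy 64-pixel samples each.
rng = np.random.default_rng(0)
prototypes = rng.normal(size=(3, 64))
labels = np.repeat(np.arange(3), 20)
faces = prototypes[labels] + rng.normal(scale=0.1, size=(60, 64))

net1 = AutoAssociator(64, 8, rng)
initial_loss = net1.loss(faces)
for _ in range(300):
    net1.train_step(faces)

net2 = CodeClassifier(8, 3)
codes = net1.encode(faces)
for _ in range(300):
    net2.train_step(codes, labels)
accuracy = float((net2.predict(codes) == labels).mean())
```

The need for many training images mentioned above shows up even here: with only a handful of samples per identity, both networks would overfit easily.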

Model-based Method

The model-based method focuses on extracting a feature vector that comprises the face shape, texture, temporal variations, and so on. The method also employs a point distribution model (PDM), which uses 134 key points to mark the locations of two-dimensional facial features such as the mouth and eyes. It further relies on face localization, texture extraction using planar subdivisions, texture warping, and image normalization to mitigate global illumination effects.
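A point distribution model can be sketched as a mean shape plus principal modes of landmark variation, so that any plausible shape is approximately x = x̄ + P b. The sketch assumes landmarks are given as (n, 2) arrays, for example 134 points, and removes only translation and scale (full Procrustes alignment would also remove rotation). The ±3√λ limit on each mode weight is a common convention, and all names are hypothetical.

```python
import numpy as np


def normalize_shape(points):
    """Remove translation and scale from an (n, 2) landmark array; rotation is not handled."""
    centered = points - points.mean(axis=0)
    return centered / np.linalg.norm(centered)


def fit_pdm(shapes, n_modes):
    """Point distribution model: mean shape plus the main modes of landmark variation."""
    X = np.stack([normalize_shape(s).ravel() for s in shapes])  # (N, 2n) as x1, y1, x2, y2, ...
    mean = X.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(X, rowvar=False))
    order = np.argsort(evals)[::-1][:n_modes]
    return mean, evecs[:, order], evals[order]


def generate_shape(mean, P, evals, b):
    """x = mean + P b, with each b_i clipped to +/- 3 sqrt(lambda_i) to keep the shape plausible."""
    limit = 3 * np.sqrt(np.maximum(evals, 0.0))
    return (mean + P @ np.clip(b, -limit, limit)).reshape(-1, 2)
```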

Other methods

While the methods above can be described as either holistic or feature-based, there is also a hybrid method in face recognition. It commonly relies on 3D images and includes the following stages: detection, position, measurement, representation, and matching. Because it uses facial depth, the hybrid method can capture and analyze the shapes of the nose, forehead, and other features. Thanks to this 3D approach, it can even recognize a face in profile.

Artificial Neural Networks Approaches for Face Detection

Artificial Neural Networks (ANNs) play a significant role in facial recognition, not to mention that they far outperform humans at facial liveness detection. Various methods have been proposed using multiple neural network types: deep convolutional, radial basis function, bilinear convolutional, and backpropagation networks, among others.

Two ANN methods deserve a more detailed overview:

MTCNN

Multitask Cascaded Convolutional Network (MTCNN) is a face detection network introduced in a study on joint face detection and alignment; a popular Python implementation is available as a library on GitHub. The network locates faces and returns their coordinates or the pixel values of each detected face, which it represents as a rectangular bounding box. MTCNN also locates five facial "keypoints": the two eyes, the nose, and the two corners of the mouth. It runs considerably faster on a GPU with CUDA support, where it can reportedly process up to 100 images per second.
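Once faces are detected, the boxes usually have to be cropped for later stages. The helper below assumes detections in the dictionary format produced by `detect_faces()` in the `mtcnn` Python package (`box` as [x, y, width, height], `confidence`, and a `keypoints` dict with `left_eye`, `right_eye`, `nose`, `mouth_left`, `mouth_right`). `crop_faces`, `interocular_distance`, and the 0.9 confidence cut-off are hypothetical additions, and the sketch runs on any detections in that format, so the package itself is only referenced in a comment.

```python
import numpy as np


def crop_faces(pixels, detections, min_confidence=0.9):
    """Cut each sufficiently confident face box out of an (H, W, 3) image."""
    height, width = pixels.shape[:2]
    crops = []
    for det in detections:
        if det["confidence"] < min_confidence:
            continue
        x, y, w, h = det["box"]
        x0, y0 = max(0, x), max(0, y)                  # boxes may start outside the image
        x1, y1 = min(width, x + w), min(height, y + h)
        crops.append(pixels[y0:y1, x0:x1])
    return crops


def interocular_distance(det):
    """Distance in pixels between the two eye keypoints of one detection."""
    (lx, ly), (rx, ry) = det["keypoints"]["left_eye"], det["keypoints"]["right_eye"]
    return float(np.hypot(rx - lx, ry - ly))


# With the mtcnn package installed, detections would typically come from:
#   from mtcnn import MTCNN
#   detections = MTCNN().detect_faces(rgb_pixels)
```

The eye distance is a convenient cue for rejecting faces too small to recognize reliably or for scaling crops before the next stage.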

FaceNet

FaceNet is a deep neural network whose key advantage is that it learns a mapping directly from face images. It maps an individual’s face image to a vector of 128 numbers. Such a low-dimensional vector is called an embedding: the image data is literally embedded into the vector produced by the network. The embeddings are L2-normalized points in a Euclidean space, so the distance between two embeddings directly measures how similar the two faces are. As a result, the system can compare a person’s embedding with the embeddings of other people stored in a database. FaceNet is trained with a triplet loss: each training triplet consists of an anchor image, a positive example of the same person, and a negative example of a different person, and the loss pulls the anchor toward the positive while pushing it away from the negative.
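The embedding comparison and the triplet loss can be sketched with NumPy on precomputed embeddings. The sketch follows the loss form from the FaceNet paper, the sum over triplets of max(‖f(a) − f(p)‖² − ‖f(a) − f(n)‖² + α, 0) with margin α = 0.2; the verification threshold of 1.1 on squared distance and the helper names are assumptions, and the network that produces the embeddings is not shown.

```python
import numpy as np


def l2_normalize(v, axis=-1):
    """Project embeddings onto the unit hypersphere, as FaceNet does with its 128-d output."""
    return v / np.linalg.norm(v, axis=axis, keepdims=True)


def triplet_loss(anchor, positive, negative, alpha=0.2):
    """Sum over the batch of max(||a - p||^2 - ||a - n||^2 + alpha, 0) for (B, 128) arrays."""
    pos_dist = np.sum((anchor - positive) ** 2, axis=1)
    neg_dist = np.sum((anchor - negative) ** 2, axis=1)
    return float(np.sum(np.maximum(pos_dist - neg_dist + alpha, 0.0)))


def same_person(emb1, emb2, threshold=1.1):
    """Verification: squared Euclidean distance below an assumed threshold means 'same person'."""
    return bool(np.sum((emb1 - emb2) ** 2) < threshold)
```

Triplets that already satisfy the margin contribute zero loss, which is why practical training focuses on selecting "hard" triplets.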

FAQ

Face recognition methods: Definition

Face recognition methods include a vast spectrum of techniques that allow recognition and identification of faces.

In essence, face recognition methods comprise a group of techniques that are capable of detecting a human face and extracting its features for analysis and verification.

There are four main stages of the process:

- Detection. A user’s face is detected.

- Analysis. Facial features and properties are registered.

- Conversion. Features are converted into a digital faceprint.

- Identification. The results are matched to the database of faces.

In some cases, the face recognition procedure may use various antispoofing techniques to prevent presentation attacks or deepfakes.

What are the main tasks of face recognition?

The primary task of facial recognition is verifying a person’s identity using their face.

Face recognition plays a major role as part of antispoofing checks. Its main objective is to provide quick verification of a person by recognizing their face.

However, other associated tasks are essential to successful facial recognition: the system should be highly accurate, secure, and capable of preventing presentation attacks such as face manipulations.

Currently, face recognition is widely used in retail, public security, banking, healthcare, advertising, law enforcement, and so on. Its primary applications and goals include money laundering prevention, finding missing people, improving staff discipline, etc.

What is the local feature analysis in face recognition?

Local feature analysis focuses on the more detailed information concerning a human face.

Local features are situated in specific facial regions, such as the mouth, eyes, nose, cheekbones, and ears. In essence, these features provide a more detailed overview of a person’s face in terms of facial anatomy.

Local feature analysis employs tools such as Gabor wavelets, spatial grouping of feature vectors, and analysis of local facial patches. Unlike global feature information, local features reside in the high-frequency range.

Together with global analysis, local feature analysis forms a reliable tandem that provides high accuracy in facial recognition.

What is the global feature analysis in face recognition?

Global feature analysis focuses on more "general" data presented by a person’s face, including its shape, etc.

Global feature analysis specializes in global facial data, including the contour of the head, the facial silhouette, proportions, and so on. Unlike local feature information, this information resides in the low-frequency range.

These features are combined into a single feature vector called the Global Fourier Feature Vector (GFFV), which works in unison with the Local Gabor Feature Vector (LGFV).

Combining local and global methods produces a more precise antispoofing tool that can detect spoofs with higher accuracy. This is especially important if the face in question is occluded by glasses, facial hair, etc.

Which neural networks are used in face recognition most often?

Neural networks are a major component in facial recognition and liveness detection since they can help verify a person within milliseconds.

Artificial Neural Networks (ANNs) are one of the main pillars of facial recognition. There is a vast repertoire of ANNs, since each method requires a neural network capable of solving its specific problems.

For example, the Multitask Cascaded Convolutional Network (MTCNN) locates facial coordinates and key points such as the nose and mouth and returns this data to the system. FaceNet maps a face image to an embedding vector and compares faces by the distances between these vectors in Euclidean space.

Apart from face recognition, neural networks play a significant role in antispoofing as a whole.

References

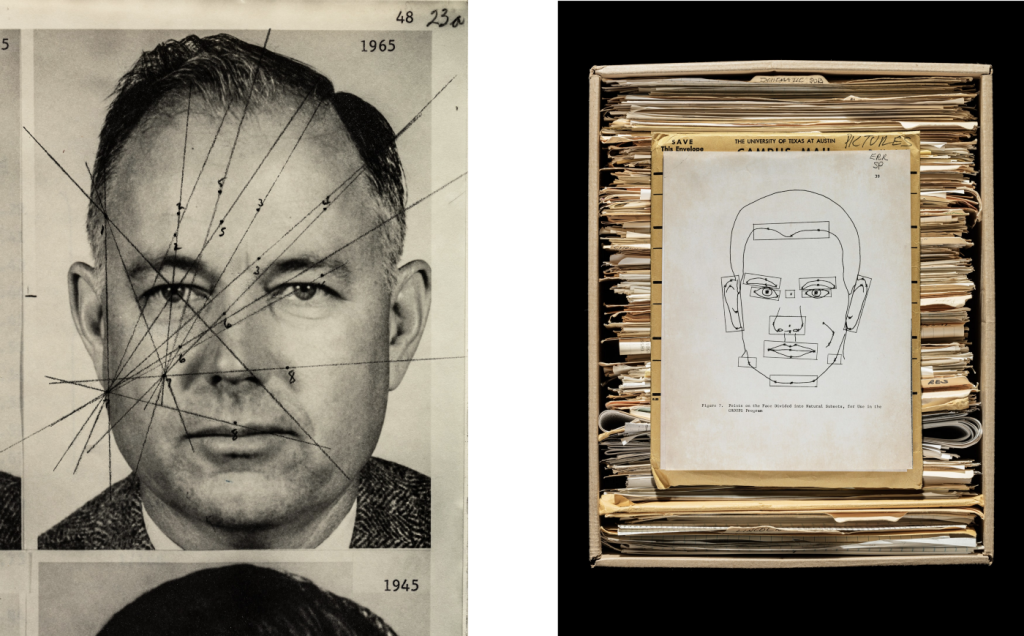

- An early example of a face recognition algorithm

- Biometric facial recognition hardware present in 90% of smartphones by 2024

- We fooled the OnePlus 6's face unlock with a printed photo

- Scheme of the Global and Local feature analysis

- Gabor wavelets

- Local Gabor Feature Vector (LGFV)

- Gabor wavelet used in a facial recognition algorithm

- Fisher’s linear

- A system designed by a Japanese researcher Takeo Kanade

- Face Recognition for Beginners

- Face Detection and Recognition Theory and Practice eBookslib

- A neural network used in face recognition and antispoofing

- The model-based method

- Face Recognition Methods & Applications

- An example of MTCNN performance

- GitHub library

- Face Detection using MTCNN — a guide for face extraction with a focus on speed

- Face image compression by FaceNet

- How to Develop a Face Recognition System Using FaceNet in Keras

- FaceNet: A Unified Embedding for Face Recognition and Clustering

Antispoofing
