Cheapfakes: Origin & Application

Cheapfakes (a blend of "cheap" and "deepfake") are a class of fake media that is quick and easy to produce, even for amateur users. Unlike traditional deepfakes, cheapfakes do not require specialized skills such as coding, manual neural network tuning, or post-production in applications such as Adobe After Effects.

Deepfakes first rose to public attention in 2017, when an eponymous subreddit was created. Initially used to produce fake pornography, the technology remained the preserve of technically skilled actors. In the following years, however, numerous apps and tools made basic deepfake techniques, such as face swapping, accessible to a broader audience.

Experts warn that cheapfakes can be highly dangerous for the targeted public. Because they take little effort and money to fabricate, cheapfakes can amplify blackmail, bullying, defamation, fraud and other threats typically posed by digital manipulations such as facial deepfakes.

Definition & Overview

In essence, a cheapfake (or shallowfake) is a manipulation of audiovisual content performed with cheap, accessible and easy-to-use software, or without any software at all. Hence the nickname "poor man's deepfake".

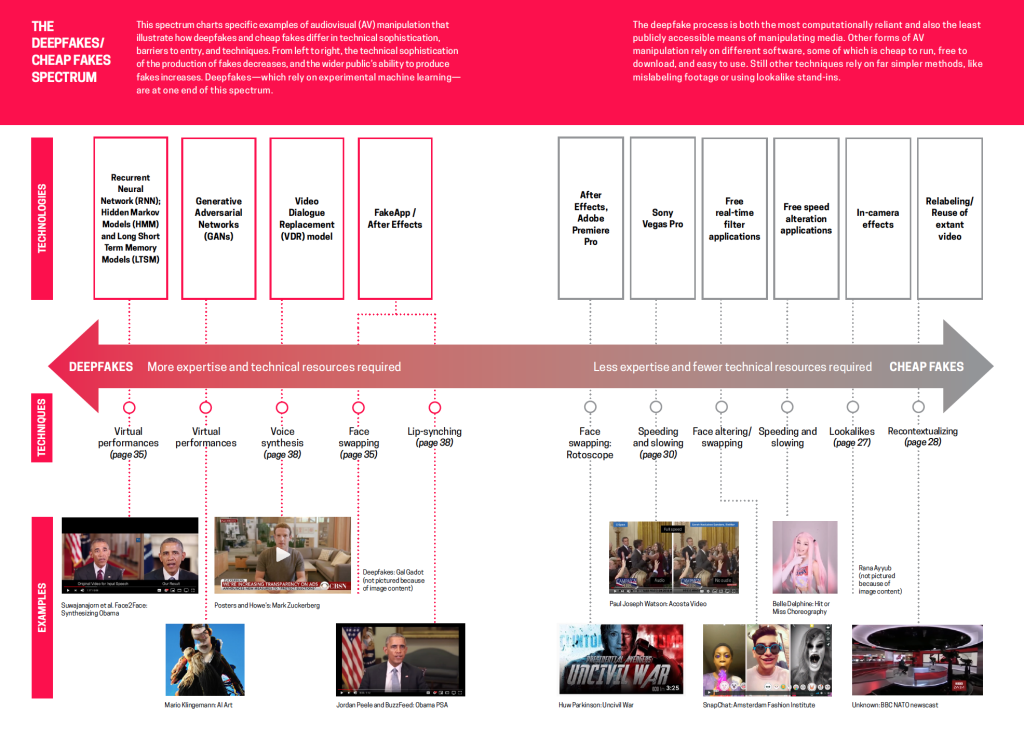

Such a manipulation may involve merging two pieces of existing media into one or creating fully synthetic material from scratch. The term was proposed by Britt Paris and Joan Donovan. In their report Deepfakes and Cheap Fakes, they argue that cheapfakes can serve to sabotage widely accepted norms of truth and evidence.

Typical production tools named by researchers include Photoshop, video-editing software such as Adobe Premiere or Sony Vegas Pro, apps with real-time filters that alter a user's appearance, and in-camera effects.

The most common cheapfake techniques are:

- Relabeling.

- Face swapping (often available on deepfake websites).

- Speeding/slowing.

- Footage editing.

One of the most popular cheapfake techniques is recontextualization. This method extracts a certain element, such as a person's statement, gesture or action, and presents it in a completely different context.

An especially notable hazard lies in the viral nature of cheapfakes. Paris and Donovan note that the spread of such media is easy to orchestrate via channels such as instant messengers, which creates a favorable "breeding ground" for disinformation.

One of the early instances of a cheapfake causing real-life consequences took place in 2018. A number of short videos began circulating in WhatsApp chats in India, presenting completely unrelated incidents as the work of "kidnappers" and "organ harvesters". This caused outrage in rural India, resulting in violence against random local residents and outsiders. The hoax relied on typical recontextualization.

The 2020 US presidential election saw a flood of cheapfakes: as reported by CREOpoint, they were viewed 120 million times during the campaign. A cheapfake tactic was also used against Filipino politician Leila de Lima in 2019, with fake videos circulating on online streaming platforms that depicted her as involved in drug dealing.

Political deepfakes and cheapfakes are often observed to fail at manipulating public opinion. This can be attributed to critical thinking and contextual awareness, which help viewers spot even high-quality fakes.

Difference Between Cheapfakes & Deepfakes

The core difference between deepfakes and cheapfakes is that the latter do not require sophisticated tools, methods and skills. The differences fall into three main groups:

- Creation. Cheapfakes are often produced manually with applications like Photoshop or Movie Maker, and they restructure or recontextualize existing media (photo, video, audio). A deepfake, by contrast, is made with more advanced, automated tools such as neural networks.

- Deep learning factor. Unlike deepfakes, shallowfakes do not rely on machine or deep learning, which often results in unpolished, rough-edged media.

- Artificial Intelligence (AI) usage. Cheapfakes mostly do not use AI-powered tools, or use them only to a limited extent. One possible reason is that AI often requires high computing power that shallowfake creators cannot easily access.

While these distinctions generally hold, cheapfakes sometimes rely on more sophisticated tools and techniques as well. In such cases, the workflows behind deepfakes and cheapfakes overlap to some degree.

Similarities Between Deepfakes & Shallowfakes

There are also a number of traits shared by deepfakes and cheapfakes:

- Shared goal. Both types of media aim to distort reality by depicting something that was not done or said in real life.

- Shared basis. Fake media is usually based on a real prototype that is altered with tools ranging from a video editor to a sophisticated convolutional neural network (CNN).

- Shared dangers. Cheapfakes and deepfakes are equally dangerous when it comes to information security, as both can be used for deception, libel and disinformation.

- AI usage. In some cases, fraudsters can employ easy-to-use AI tools to speed things up. Among them are FakeApp, Zao, FaceApp, Wombo, DeepFaceLab, and others. It’s possible that in the future, tools similar to Adobe Voco will allow unskilled users to produce falsified media of the highest quality at home.

It’s worth mentioning that more similarities may arise as both technologies keep evolving.

Cheapfake Motives, Examples & Techniques

Motives for creating cheapfakes range from entertainment and online "trolling" to serious criminal schemes, deliberate misinformation and slander. Cheapfakes are often used to sow discord between groups or to provoke political or social unrest.

One example features US wrestler and actor Dwayne Johnson. In an artfully edited video, he appears to be singing insults to Hillary Clinton, although in reality the song was addressed to fellow wrestler Vickie Guerrero as part of a wrestling act. The video caused a backlash when Johnson was later seen supporting the Democrats in 2020.

Another instance of political propaganda was spotted in 2021: a photo allegedly showed a Serbian woman forced to flee Kosovo during NATO's bombing. In reality, the picture showed an Albanian refugee standing in line to enter Macedonia during the Yugoslav wars.

Cheapfakes are mostly produced with simple techniques:

- Editing. This includes rearranging frames and audio clips, splicing in foreign elements, replicating or rotating regions, etc. It makes it appear as if things that never happened were said or done.

- Face swapping. Achieved with simple AI algorithms or tools like Photoshop, it extracts a target's face and places it on another person. Fraudsters often use this method to spoof biometric systems.

- Tempo alteration. Speeding up or slowing down video footage.

- Photoshopping. Doctoring images by cutting, pasting, retouching, etc.

- Recontextualizing. Extracting a certain element from media and presenting it in a completely different context.

Even though some methods, such as photoshopping, are well known, fraudsters still use them widely.
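Replication, listed above as an editing technique, copies a region within the same image, so the forged picture contains two identical areas. The sketch below shows one simple way such duplication can be exposed. It assumes an uncompressed grayscale image stored as a list of rows and an exact pixel copy; the function name `find_duplicate_blocks` and its parameters are illustrative. Practical copy-move detectors match robust block features (for example DCT or PCA coefficients) rather than raw pixels, so that they survive recompression and noise.

```python
import random
from collections import defaultdict

def find_duplicate_blocks(img, block=4, min_shift=4):
    """Return (pos_a, pos_b) pairs of identical, non-flat blocks.

    Exact pixel matching is a simplification (hypothetical helper): real
    copy-move detectors compare robust block features instead.
    """
    h, w = len(img), len(img[0])
    seen = defaultdict(list)  # block content -> top-left corners where it occurs
    for y in range(h - block + 1):
        for x in range(w - block + 1):
            key = tuple(img[y + dy][x + dx] for dy in range(block) for dx in range(block))
            if len(set(key)) > 1:  # skip flat blocks, which match trivially
                seen[key].append((y, x))
    pairs = []
    for positions in seen.values():
        for i, a in enumerate(positions):
            for b in positions[i + 1:]:
                # Ignore matches between heavily overlapping neighbouring blocks
                if max(abs(a[0] - b[0]), abs(a[1] - b[1])) >= min_shift:
                    pairs.append((a, b))
    return pairs

# Synthetic test: a random-noise image with an 8x8 patch copied elsewhere
random.seed(0)
img = [[random.randrange(256) for _ in range(32)] for _ in range(32)]
for dy in range(8):
    for dx in range(8):
        img[20 + dy][18 + dx] = img[2 + dy][3 + dx]  # replicate a region

pairs = find_duplicate_blocks(img)
print(len(pairs), pairs[0])
```

Every reported pair on this synthetic image shares the same displacement, which is the telltale signature of a copied region: genuine textures rarely repeat with one consistent offset.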

Cheapfake Detection Methods

Several effective cheapfake detection methods have been proposed:

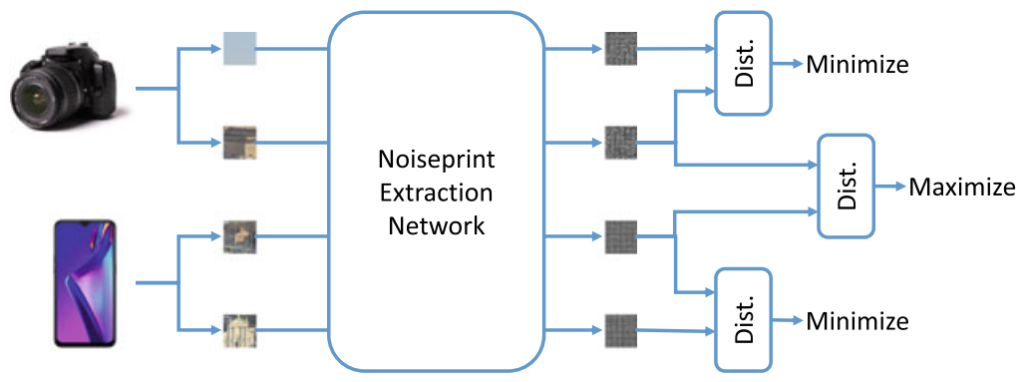

- Camera fingerprint. When a camera acquires an image, its sensor leaves artifacts that are unique to each individual device, such as photo-response non-uniformity noise (PRNU). If certain regions of a picture lack the expected pattern, the image has likely been tampered with. Extracting this pattern involves denoising algorithms, high-pass filters, etc.

- Compression clues. After doctoring, a JPEG image is typically compressed a second time when it is saved. Double compression, or double quantization, leaves a number of artifacts that can serve as clues, especially in tampered regions. A similar technique can be applied to videos.

- Editing artifacts analysis. New elements inserted into a video or an image need additional tweaking to fit into the context: resampling, rotation, replication, color adjustment, blurring, splicing, and so on. These procedures, in turn, leave detectable artifacts.

- Localization. Rather than judging an entire image, this approach examines pixel-level characteristics to pinpoint manipulated regions. It includes patch-based analysis, segmentation-like approaches and detection-like methods.

- Audio detection. One approach to audio cheapfake detection analyzes wideband oscillation signals (the Gibbs effect) that editing operations can introduce. It can decide whether audio has been tampered with, while distinguishing these clues from normal transients and other sonic artifacts.
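The camera-fingerprint check described above can be sketched in a few lines. This is a simplified illustration under several assumptions: the suspect camera's PRNU fingerprint `K` is already known (in practice it is estimated from many images taken with that camera), a 3x3 mean filter stands in for the wavelet denoisers used in the literature, the test data are synthetic, and the 0.3 decision threshold is arbitrary. Each block's noise residual is correlated with the PRNU term the fingerprint predicts (pixel value times `K`); a block pasted from another camera shows almost no correlation. All function names are hypothetical.

```python
import math
import random

def box_denoise(img):
    """3x3 mean filter on interior pixels (crude stand-in for a wavelet denoiser)."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(img[y + dy][x + dx]
                            for dy in (-1, 0, 1) for dx in (-1, 0, 1)) / 9
    return out

def ncc(a, b):
    """Normalized cross-correlation of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    norm = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / norm

def block_prnu_scores(img, fingerprint, block=16):
    """Correlate the noise residual with the expected PRNU term I*K, per block."""
    h, w = len(img), len(img[0])
    smooth = box_denoise(img)
    scores = {}
    for by in range(0, h, block):
        for bx in range(0, w, block):
            residual, expected = [], []
            # Skip the outermost image border, where the filter is undefined
            for y in range(max(by, 1), min(by + block, h - 1)):
                for x in range(max(bx, 1), min(bx + block, w - 1)):
                    residual.append(img[y][x] - smooth[y][x])
                    expected.append(img[y][x] * fingerprint[y][x])
            scores[(by, bx)] = ncc(residual, expected)
    return scores

# Synthetic data: two cameras with independent (hypothetical) fingerprints
random.seed(1)
H = W = 48
K_cam = [[random.gauss(0, 0.02) for _ in range(W)] for _ in range(H)]
K_other = [[random.gauss(0, 0.02) for _ in range(W)] for _ in range(H)]

def shoot(K):
    """Smooth scene (horizontal gradient) modulated by PRNU, plus shot noise."""
    return [[(100 + x) * (1 + K[y][x]) + random.gauss(0, 1) for x in range(W)]
            for y in range(H)]

img = shoot(K_cam)
donor = shoot(K_other)
for y in range(16, 32):  # splice a 16x16 region taken by the other camera
    for x in range(16, 32):
        img[y][x] = donor[y][x]

scores = block_prnu_scores(img, K_cam)
suspicious = [pos for pos, s in scores.items() if s < 0.3]
print(suspicious)
```

Correlating block by block, instead of over the whole frame, is what turns camera identification into tamper localization.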

In addition, CNNs can be used for preprocessing steps such as denoising or high-pass filtering to expose evidence. Two-branch methods employ a CNN architecture that analyzes low-end and high-end data at the same time. Siamese networks can compare camera-based features to detect splicing, and so on.
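The high-pass preprocessing and patch-based localization mentioned above can be illustrated without a neural network. The sketch below applies a fixed 3x3 Laplacian-style kernel, similar in spirit to the hand-crafted high-pass filters some CNN detectors use as a first layer, and then compares the residual variance of 8x8 patches. It assumes a grayscale image in which the inserted region has a different noise level than the rest (for example because it was smoothed or resampled); the function names and the factor-of-3 outlier rule are illustrative choices, not a published method.

```python
import random
import statistics

# Laplacian-style high-pass kernel: cancels smooth content, keeps fine noise
KERNEL = ((0, -1, 0), (-1, 4, -1), (0, -1, 0))

def high_pass(img):
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(KERNEL[dy + 1][dx + 1] * img[y + dy][x + dx]
                            for dy in (-1, 0, 1) for dx in (-1, 0, 1))
    return out

def patch_noise_map(img, patch=8):
    """Variance of the high-pass residual in each patch (image border excluded)."""
    hp = high_pass(img)
    h, w = len(img), len(img[0])
    scores = {}
    for py in range(0, h, patch):
        for px in range(0, w, patch):
            vals = [hp[y][x]
                    for y in range(max(py, 1), min(py + patch, h - 1))
                    for x in range(max(px, 1), min(px + patch, w - 1))]
            scores[(py, px)] = statistics.pvariance(vals)
    return scores

def flag_outliers(scores, ratio=3.0):
    """Flag patches whose residual variance differs from the median by > ratio."""
    med = statistics.median(scores.values())
    return sorted(p for p, v in scores.items() if v > med * ratio or v < med / ratio)

random.seed(2)
N = 32
# Linear gradient scene with sensor-like noise everywhere ...
img = [[50 + 2 * x + y + random.gauss(0, 2.0) for x in range(N)] for y in range(N)]
# ... except a 16x16 insert with much weaker noise (e.g. a smoothed paste)
for y in range(8, 24):
    for x in range(16, 32):
        img[y][x] = 50 + 2 * x + y + random.gauss(0, 0.3)

print(flag_outliers(patch_noise_map(img)))
```

The inserted region stands out because the kernel removes the gradient completely and leaves mostly noise, whose strength differs between the genuine and the pasted content.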

FAQ

Is there any difference between cheapfakes & shallowfakes?

Cheapfakes and shallowfakes may be produced with different tools; however, their nature and purpose are essentially identical.

According to antispoofing experts, a cheapfake is any type of fake media produced with broadly available, basic and often easy-to-use tools: audio and film editors, mobile applications, etc. Cheapfakes primarily involve common techniques: splicing, tempo manipulation, editing, recontextualization, and others.

Shallowfakes, unlike deepfakes, do not involve machine learning; their primary tools are video editors. In some cases, shallowfakes are created with simple applications that are in fact based on machine learning but do not require any special training to use. Antispoofing protection is designed to detect both cheapfakes and shallowfakes.

What are cheapfakes & shallowfakes?

Cheapfakes and shallowfakes are fake media produced using easily available and cheap tools.

According to antispoofing researchers, cheapfakes are a class of fabricated media that is cheap and simple to create. They include photo collages and videos that have been spliced, slowed down, sped up or otherwise edited for malicious purposes.

Recontextualization is another common technique for creating cheapfakes: it presents an idea, statement, gesture or action in a different light, distorting its original meaning.

Shallowfakes belong to the class of cheapfakes. They are fabricated videos produced without deep learning, which makes them easier for antispoofing tools and human observers alike to detect.

What is the difference between cheapfakes & deepfakes?

Unlike deepfakes, cheapfakes are not usually created with machine learning.

In antispoofing, deepfakes and cheapfakes are treated as two different categories. Deepfakes are produced with specialized tools based on deep learning algorithms. Among them, experts name Face2Face, DeepFaceLab, Zao, and other methods, typically based on Generative Adversarial Networks (GANs).

Cheapfakes are made with simpler tools available to everyone, such as Adobe Photoshop, Movie Maker, Audacity and other software for home use. In some cases, however, a cheapfake can be forged with FaceApp or other simple, cheap applications and used to fool a facial recognition system.

How many cheapfakes are there?

The global number of cheapfakes is impossible to estimate, given how easy and cheap they are to produce.

Cheapfakes require little effort and time to produce. Therefore, malicious actors use them extensively, among other things to fool liveness detection, especially actors who lack top-grade equipment and software or specialized programming skills.

Cheapfakes are widely used by online scammers, who forge documents, signatures, selfies and verification videos to fool facial recognition technology. A substantial number of cheapfakes were also produced during the 2020 US presidential election. Thousands of specimens circulate in instant messengers and social media, spreading disinformation for various purposes.

Why are cheapfakes dangerous?

Cheapfakes have been known to pose a serious threat despite their low production cost.

According to antispoofing experts, cheapfakes can cause serious moral, financial and legal harm. In one incident, a cheapfake-based hoax circulated via WhatsApp in India, claiming that random motorcyclists were potential kidnappers. The hoax resulted in mob anger and several unlawful killings. Recontextualized footage was used to 'confirm' the claim, which fueled the outrage.

Cheapfakes are also used by online scammers for various purposes, from bypassing antispoofing checks to committing financial fraud. Political cheapfakes pose a serious threat as well: according to one report, they can spark scandals, provoke international hostility, etc.

How are cheapfakes made?

Cheapfakes are the simplest type of fake media in terms of creation.

Whatever the purpose, be it online fraud or an attack on facial recognition, cheapfakes are extremely easy to produce. They are mostly created with tools designed for average users, such as image and video editors, text generators and audio editors.

Montage is a primary cheapfake technique. One of the early examples is a fake 1983 conversation between Reagan and Thatcher, assembled from excerpts of both figures' speeches. Cheapfakes also involve recontextualization, in which a phrase or action is taken out of its original context and placed into another. However, antispoofing checks are designed to reveal such post-processing.
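Splicing speech excerpts, as in the montage above, usually leaves a discontinuity at each cut. A discontinuity has a wideband spectrum, which is what produces Gibbs-type ringing once the signal is band-limited, and this is the kind of clue the audio detection method described earlier looks for. The crude sketch below does not implement that method; it simply flags samples where a second-difference (high-pass) filter spikes far above its median level. Pure tones stand in for speech, and the sample rate, threshold and function names are assumptions.

```python
import math

SR = 8000  # sample rate in Hz (assumed)

def tone(freq, n, phase=0.0, amp=0.5):
    """n samples of a sine wave, used here as a stand-in for a speech excerpt."""
    return [amp * math.sin(2 * math.pi * freq * i / SR + phase) for i in range(n)]

def find_cut_points(x, factor=8.0):
    """Return sample indices where the second difference (a crude high-pass
    filter) exceeds `factor` times its median magnitude."""
    d2 = [x[i + 1] - 2 * x[i] + x[i - 1] for i in range(1, len(x) - 1)]
    med = sorted(abs(v) for v in d2)[len(d2) // 2]
    return [i + 1 for i, v in enumerate(d2) if abs(v) > factor * med]

# "Montage": one excerpt glued to another that starts mid-waveform,
# as happens when recorded speech is cut and re-joined
clip = tone(200, 1000) + tone(300, 1000, phase=math.pi / 2)
print(find_cut_points(clip))
```

A real detector must also separate cut points from natural transients such as plosives, and a cut that happens to join two waveforms smoothly will evade this simple check.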

How to detect cheapfakes?

A number of inexpensive detection methods have been proposed for cheapfakes.

There are a few antispoofing techniques that can successfully identify cheapfakes:

- Camera fingerprint. Every camera (including those built into smartphones and other gadgets) leaves a set of artifacts unique to it, which makes it possible to trace the original footage.

- Editing clues. 'Photoshopping' inevitably leaves traces as well.

- Compression clues. An altered image or video usually goes through compression twice, which also creates evidence of tampering.
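The compression clue can be reproduced numerically. JPEG stores each DCT coefficient as an integer level: the coefficient divided by a quantization step and rounded. If an image saved with step 5 is edited and re-saved with step 3, the first-pass values are all multiples of 5, so some integer levels in the second pass can never occur and the histogram shows periodic gaps. This sketch assumes Gaussian-distributed coefficients for one DCT frequency and skips the inverse DCT, pixel rounding and clipping that real decoders perform, which blur the pattern somewhat; the step sizes are illustrative.

```python
import random
from collections import Counter

def quantize(coeffs, q):
    """JPEG-style quantization: divide by step q and round to integer levels."""
    return [round(c / q) for c in coeffs]

def dequantize(levels, q):
    """Decoder side: multiply levels back by the step."""
    return [v * q for v in levels]

def histogram(levels, max_bin=10):
    """Counts of levels 0..max_bin."""
    counts = Counter(levels)
    return [counts[b] for b in range(max_bin + 1)]

random.seed(3)
# Stand-in for one DCT frequency collected over many 8x8 blocks (assumed Gaussian)
coeffs = [random.gauss(0, 20) for _ in range(20000)]

single = quantize(coeffs, 3)                                # saved once, step 3
double = quantize(dequantize(quantize(coeffs, 5), 5), 3)    # step 5, then step 3

print("single:", histogram(single))
print("double:", histogram(double))
```

With steps 5 and then 3, levels 1, 4, 6 and 9 stay empty, while the singly compressed histogram has no such gaps; detectors look for this kind of periodic pattern, often region by region.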

Additionally, cheapfakes frequently have traits that reveal the forgery to the human eye. For example, the well-known "Drunk Pelosi" cheapfake was easy to identify because of the unnatural distortion caused by slowing down the footage.
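Slowing footage stretches every gesture and syllable in time. If the audio track is slowed by plain resampling, its pitch also drops by the same factor, which is one more cue an observer or a tool can check; creators may of course apply pitch correction instead. A minimal sketch, assuming a 200 Hz tone as a stand-in for a voice, an 8 kHz sample rate, linear-interpolation resampling and a zero-crossing frequency estimate (all illustrative choices; the function names are hypothetical):

```python
import math

SR = 8000  # sample rate in Hz (assumed)

def slow_down(samples, factor):
    """Naively slow audio to `factor` speed (< 1) by linear-interpolation
    resampling: the same waveform is played over a longer time, without
    any pitch correction."""
    n_out = int(len(samples) / factor)
    out = []
    for j in range(n_out):
        pos = j * factor
        i = int(pos)
        if i + 1 >= len(samples):
            break
        frac = pos - i
        out.append(samples[i] * (1 - frac) + samples[i + 1] * frac)
    return out

def zero_crossing_freq(samples):
    """Estimate the dominant frequency from the rate of upward zero crossings."""
    ups = sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)
    return ups * SR / len(samples)

voice = [math.sin(2 * math.pi * 200 * i / SR) for i in range(SR)]  # 1 s at 200 Hz
slowed = slow_down(voice, 0.75)
print(len(voice) / SR, zero_crossing_freq(voice))
print(len(slowed) / SR, zero_crossing_freq(slowed))
```

Slowing to 75% speed stretches one second of audio to about 1.33 seconds and lowers the tone to roughly 150 Hz. Pitch-preserving time-stretching algorithms avoid this cue, so its absence proves nothing on its own.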

Are there any online deepfake or cheapfake detection tools?

There is a variety of online deepfake & cheapfake detection tools.

Deepfake detection apps and platforms are offered by a number of companies and foundations. The most common tools include Sensity, Microsoft Video Authenticator, Deepware Scanner and DeepfakeProof. Most of these tools have been designed to detect fake media doctored with machine learning.

Cheapfakes can be detected using the same tools. Moreover, platforms like Fabula AI, Logically, The Factual, Check by Meedan and others help identify misinformation that is often spread with cheapfakes. Adobe's Content Authenticity Initiative (CAI) also aims to support antispoofing against both deepfakes and cheapfakes in the future.

Are there any tools to detect the origin of online images?

There is a vast repertoire of image finders online. However, they cannot guarantee finding the original source picture.

As a cheapfake countermeasure, it is often recommended to locate the original image with search engines such as Google, Yandex Images or Bing. There are also specialized image recognition and reverse image search services, such as Amazon Rekognition, Clarifai and TinEye.

However, none of these search engines can guarantee that a source image will be found. The Content Authenticity Initiative (CAI) seeks to introduce a platform where all media, including pictures, will be protected with 'indestructible' metadata that helps trace the source and prevent spoofing.
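Locating an original picture relies on fingerprints that survive re-encoding, resizing and mild color changes. One well-known, simple example is the average hash (aHash), sketched below. It is shown only to illustrate the idea; the services named above do not necessarily use it. The synthetic "blocky" test images, the image size (divisible by 8) and all function names are assumptions.

```python
import random

def average_hash(img, size=8):
    """aHash: block-average the image down to size x size cells, then set one
    bit per cell depending on whether it is brighter than the overall mean."""
    h, w = len(img), len(img[0])
    bh, bw = h // size, w // size
    cells = []
    for cy in range(size):
        for cx in range(size):
            block = [img[y][x] for y in range(cy * bh, (cy + 1) * bh)
                     for x in range(cx * bw, (cx + 1) * bw)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for c in cells:
        bits = (bits << 1) | (c > mean)
    return bits

def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

def blocky_image(n=64, cell=8):
    """Synthetic test picture: random flat 8x8 tiles plus slight pixel noise."""
    tiles = [[random.uniform(0, 255) for _ in range(n // cell)] for _ in range(n // cell)]
    return [[tiles[y // cell][x // cell] + random.gauss(0, 1) for x in range(n)]
            for y in range(n)]

random.seed(4)
original = blocky_image()
# A re-shared copy: contrast lowered, brightness raised, fresh noise added
copy = [[0.9 * v + 20 + random.gauss(0, 2) for v in row] for row in original]
unrelated = blocky_image()

h0 = average_hash(original)
print(hamming(h0, average_hash(copy)), hamming(h0, average_hash(unrelated)))
```

The edited copy stays within a few bits of the original, while the unrelated image differs in many bits. Because aHash summarizes only coarse structure, a small local splice may leave it nearly unchanged, so it helps find the source picture rather than prove tampering.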
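The idea behind tamper-evident provenance metadata can be sketched with the Python standard library. A manifest records a hash of the exact content bytes together with source information, and a keyed signature protects the manifest itself. This is a simplified stand-in: the shared secret key, the manifest fields and the function names are hypothetical, and the actual CAI/C2PA specifications use certificate-based public-key signatures and a defined manifest format rather than an HMAC.

```python
import hashlib
import hmac
import json

SIGNING_KEY = b"hypothetical-device-key"  # stand-in for a real private signing key

def _sign(claim: dict) -> str:
    """Keyed signature over a canonical JSON encoding of the claim."""
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()

def make_manifest(content: bytes, source: str) -> dict:
    """Bind source metadata to the exact content bytes."""
    claim = {"source": source, "sha256": hashlib.sha256(content).hexdigest()}
    return {"claim": claim, "signature": _sign(claim)}

def verify(content: bytes, manifest: dict) -> bool:
    """True only if the manifest is authentic and still matches the content."""
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return False  # metadata was altered after signing
    return hashlib.sha256(content).hexdigest() == manifest["claim"]["sha256"]

photo = b"placeholder for the original image bytes"
manifest = make_manifest(photo, source="hypothetical newsroom camera")

print(verify(photo, manifest))            # untouched content
print(verify(photo + b"edit", manifest))  # content changed after signing
forged = {"claim": dict(manifest["claim"], source="someone else"),
          "signature": manifest["signature"]}
print(verify(photo, forged))              # metadata changed after signing
```

Any change to the content or to the recorded source breaks verification. A binding like this cannot, however, stop someone from simply stripping the metadata, which is the problem CAI's goal of 'indestructible' metadata addresses.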

Cheapfakes are also used in presentation attacks, though many fraudsters apply more complex methods.

References

- Porn Producers Offer to Help Hollywood Take Down Deepfake Videos

- What Are Cheapfakes? Are They Different From Deepfakes?

- A cheapfake image used by the Nigerian scammers

- Deepfakes and cheap fakes. The manipulation of audio and visual evidence

- Re-Contextualization. The Effects of Digital Medias Audiovisual Documents

- The deepfake/cheapfake spectrum proposed by Paris and Donovan

- Deepfake videos could 'spark' violent social unrest

- "Drunken Pelosi" cheapfake created with speed slowing

- The deepfake apocalypse never came. But cheapfakes are everywhere

- A comedy song from ROCK was used as a cheapfake basis

- The ‘cheapfake’ photo trend fuelling dangerous propaganda

- Data-Driven Digital Integrity Verification

- Photo-response non-uniformity noise by Wikipedia

- DHNet: Double MPEG-4 Compression Detection via Multiple DCT Histograms

- A supporting method to detect manipulated zones in digitally edited audio files

- The Scientist and Engineer's Guide to Digital Signal Processing
